Deploy Ollama

A powerful and user-friendly platform for running LLMs

Ollama (volume mounted at /root/.ollama)

Open WebUI (volume mounted at /app/backend/data)

Deploy and Host Ollama on Railway

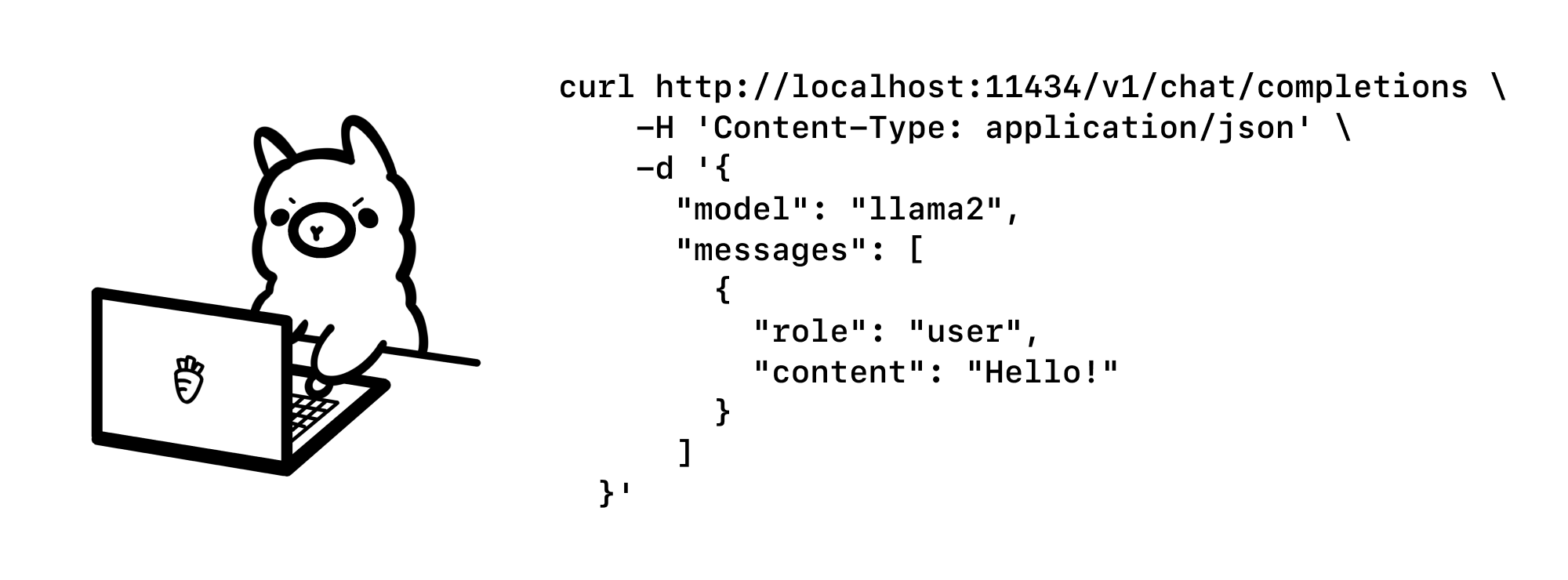

Ollama is a platform for deploying and managing AI models. It provides tools and an interface for integrating models into applications and streamlines the deployment process. Ollama supports various model types and can be customized to fit specific needs, which makes it suitable for both small projects and large-scale implementations.

About Hosting Ollama

Hosting Ollama means running a service that manages model downloads, serves inference, and exposes API endpoints for applications. The service handles model storage, memory management for large language models, and API request processing while keeping models available. A production deployment needs enough disk space for model storage, sufficient memory for inference, capacity for concurrent API requests, and a plan for model version management. Railway simplifies this by providing persistent storage for models, managing container resources for inference workloads, handling API routing, and offering an optional web interface for model configuration.
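As a rough illustration of the API access described above, the sketch below builds a non-streaming request to Ollama's `/api/generate` endpoint using only the Python standard library. The base URL, the model name `llama3.2`, and the helper names `build_generate_request` and `generate` are assumptions for illustration; substitute the domain Railway assigns to your Ollama service and a model you have actually pulled.

```python
import json
import urllib.request

# Assumption: replace with the domain Railway assigns to your Ollama service.
# 11434 is Ollama's default port when run locally.
OLLAMA_BASE_URL = "http://localhost:11434"


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for /api/generate with streaming disabled."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the JSON reply."""
    request = build_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["response"]


# Example call (requires a reachable Ollama instance with the model pulled):
# print(generate(OLLAMA_BASE_URL, "llama3.2", "Why is the sky blue?"))
```

Setting `"stream": False` asks for a single JSON object instead of a stream of partial responses, which keeps the client code short at the cost of waiting for the full completion.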

ℹ️ Optional Feature: This template also includes an optional web interface, Open WebUI, for configuring Ollama. Visit the Open WebUI repository for related assistance.

Common Use Cases

- AI Model Deployment: Deploy and manage various AI models with user-friendly interfaces for application integration

- Scalable AI Infrastructure: Build scalable AI solutions suitable for both small projects and large-scale implementations

- Custom AI Applications: Integrate AI models into applications with customizable deployment configurations

Dependencies for Ollama Hosting

The Railway template includes the required runtime environment and the Ollama platform, with pre-configured model management and an optional web interface.

Deployment Dependencies

Implementation Details

Available Models:

Ollama has over 100 models across various parameter sizes. The list can be found on ollama.com/library.
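To check which models a running instance already has, one option is Ollama's `/api/tags` endpoint, which lists locally available models. The sketch below is a minimal example under stated assumptions: the helper names are hypothetical, the base URL is whatever your deployment exposes, and the sample payload in the usage comment is illustrative and not real output.

```python
import json
import urllib.request


def fetch_tags(base_url: str, timeout: float = 30.0) -> dict:
    """GET /api/tags and return the decoded JSON body."""
    url = f"{base_url.rstrip('/')}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def list_model_names(tags_response: dict) -> list[str]:
    """Extract model names (e.g. 'llama3.2:latest') from a /api/tags reply."""
    return [model["name"] for model in tags_response.get("models", [])]


def has_model(tags_response: dict, model: str) -> bool:
    """Check for a model, treating a bare name as the ':latest' tag."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in list_model_names(tags_response)


# Illustrative usage with a hand-written payload (not real server output):
# sample = {"models": [{"name": "llama3.2:latest"}]}
# has_model(sample, "llama3.2")
```

Separating the network call from the parsing keeps the parsing logic easy to test without a live server.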

Documentation:

The official Ollama documentation can be found in the repository. Blog posts and release information can be found on the blog.

Platform Features:

- Model Management: Deploy and manage various AI model types with flexible configuration options

- User-friendly Interface: Optional web interface for simplified Ollama configuration and management

- Flexible Architecture: Scalable and efficient architecture suitable for different project sizes

- Integration Tools: Streamlined deployment process for integrating AI into applications

- Customization Support: Customize deployments to fit specific application needs and requirements

License:

Ollama is distributed under the MIT License.

Web Interface Integration:

The template includes optional Open WebUI integration for enhanced user experience in configuring and managing Ollama deployments through a web-based interface.

Why Deploy Ollama on Railway?

Railway is a singular platform to deploy your infrastructure stack. Railway will host your infrastructure so you don't have to deal with configuration, while allowing you to vertically and horizontally scale it.

By deploying Ollama on Railway, you are one step closer to supporting a complete full-stack application with minimal burden. Host your servers, databases, AI agents, and more on Railway.

Template Content

Ollama

ollama/ollama

Open WebUI

ghcr.io/open-webui/open-webui