Deploy FlowiseAI (Visual LLM Workflow Builder)

Flowise, Visual LLM Flow Builder (OpenPipe Alternative), Self Host [Sep'25]

Services in this template:

- flowise: flowiseai/flowise, volume mounted at /data

- Postgres: railwayapp-templates/postgres-ssl:16, volume mounted at /var/lib/postgresql/data

Deploy and Host Managed Flowise AI Service with one click on Railway

Flowise AI is an open-source visual LLM flow builder, available on GitHub as "FlowiseAI/Flowise", for building customizable AI workflows.

About Hosting FlowiseAI on Railway (Self Hosting Flowise on Railway)

Self hosting Flowise means running the platform in a deployment you control, so your data and configurations stay in your own instance rather than on the Flowise Cloud service. You can self host Flowise on modern cloud platforms; hosting Flowise AI on Railway, for example, gives you a managed, scalable way to run your visual AI workflows while keeping the instance and its data under your own account.

Why Deploy Managed Flowise AI Service on Railway

Deploying Flowise on Railway offers a seamless, single-click experience, making it the ideal platform to self host FlowiseAI without complex setup. Railway’s intuitive interface, automated scaling, and robust infrastructure simplify the management of your Flowise instance, ensuring reliability and quick deployment for both beginners and advanced users.

Railway vs DigitalOcean:

While DigitalOcean requires manual server setup, maintenance, and backup management, Railway lets you deploy Flowise instantly with zero sysadmin effort and built-in scaling.

Railway vs Linode:

Linode demands hands-on security patches and storage configuration, but Railway provides automated updates, secure containers, and an intuitive interface for hassle-free Flowise hosting.

Railway vs Vultr:

With Vultr, you must handle disk management, environment setup, and performance tuning, whereas Railway automates these tasks and offers one-click deployment for Flowise.

Railway vs AWS Lightsail:

AWS Lightsail involves complex networking, manual scaling, and OS upkeep, but Railway simplifies all of this, delivering seamless, fast Flowise deployments and worry-free scaling.

Railway vs Hetzner:

Hetzner offers power and price, but expects you to manage every detail of server ops; Railway’s managed platform eliminates this burden, focusing on security and rapid Flowise deployment.

Common Use Cases

Here are common use cases for Flowise:

- Multi Agent Flowise AI: Orchestrate multiple intelligent agents for research, data analysis, or workflow automation within complex business processes.

- Chat Assistants using FlowiseAI: Build custom chatbots or virtual assistants that leverage FlowiseAI for dynamic customer support and lead generation.

- Human in the Loop: Design workflows that integrate human review and intervention alongside automated AI systems for better accuracy and compliance.

- RLHF (Reinforcement Learning from Human Feedback): Easily implement RLHF pipelines to fine-tune AI models with real-time user feedback using Flowise’s visual interface.

Dependencies for Flowise AI hosted on Railway

Flowise depends on Node.js, npm (or yarn), and a database like SQLite or PostgreSQL for core functionality. Docker and proper environment variable configuration are also recommended for easy and reliable deployment. Using this template, you can self host in a single click.
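For a manual (non-template) setup, a quick way to see which of these tools are already installed is to look them up on PATH. The following is a minimal sketch; the command names `node`, `npm`, `yarn`, and `docker` are assumed from the dependencies listed above and may differ on your system.

```python
import shutil

# Command names assumed from the dependency list above; adjust to your setup.
REQUIRED_TOOLS = ["node", "npm"]
OPTIONAL_TOOLS = ["yarn", "docker"]


def find_tools(names):
    """Map each command name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names):
    """Return the command names that could not be found on PATH."""
    return [name for name, path in find_tools(names).items() if path is None]


print("Missing required tools:", missing_tools(REQUIRED_TOOLS))
print("Missing optional tools:", missing_tools(OPTIONAL_TOOLS))
```

An empty list for the required tools suggests the machine is ready for a source install; the database still has to be provisioned separately.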

Deployment Dependencies for Managed Flowise AI Service

For production-ready and seamless deployments, Docker is recommended to ensure consistency across environments, and setting up environment variables (e.g., API keys) is essential for integrating external AI services.

Implementation Details for Flowise AI

When deploying Flowise on Railway, connect the Flowise GitHub repository, set essential environment variables like PORT, DATABASE_URL, and your API keys, and Railway takes care of the build and deployment process. Remember to define FLOWISE_USERNAME and FLOWISE_PASSWORD to secure access to your Flowise instance, so that only authorized users can manage your workflows.
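Before triggering a deployment, it can help to confirm that none of these variables is missing. The sketch below is illustrative only: the variable names are the ones mentioned in this section, and the exact set your Flowise version reads may differ, so check the Flowise documentation for your release.

```python
import os

# Variable names are the ones mentioned in the text above (an assumption about
# what your Flowise version expects).
REQUIRED_VARS = ["PORT", "DATABASE_URL", "FLOWISE_USERNAME", "FLOWISE_PASSWORD"]


def missing_vars(env, required=REQUIRED_VARS):
    """Return the required variable names that are absent or blank in env."""
    return [name for name in required if not env.get(name, "").strip()]


def port_is_valid(env):
    """Return True if PORT parses as a TCP port number between 1 and 65535."""
    try:
        port = int(env.get("PORT", ""))
    except ValueError:
        return False
    return 1 <= port <= 65535


problems = missing_vars(os.environ)
if problems:
    print("Set these variables before deploying:", ", ".join(problems))
```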

How does Flowise look against other visual LLM flow builders (Alternatives to Flowise AI)

Flowise vs Langflow

Flowise and Langflow both offer visual LLM workflow builders, but Flowise emphasizes a more flexible drag-and-drop interface with quick integrations for chatbots and multi-agent systems, while Langflow often focuses on rapid prototyping and prompt chaining.

Flowise vs OpenPipe

Flowise enables visually designing diverse AI workflows, suitable for non-coders and teams, whereas OpenPipe is tailored for prompt engineering, LLM evaluation, and quick model deployment, with less emphasis on broad workflow design.

Flowise vs PromptFlow

Flowise provides a platform-agnostic canvas to build and orchestrate AI flows, whereas PromptFlow (by Azure ML) is tightly integrated with Azure’s ecosystem, offering advanced ML pipeline management but requiring Azure services.

Flowise vs Haystack

Flowise focuses on intuitive low-code AI workflow creation, ideal for chatbots and assistants; Haystack, on the other hand, specializes in end-to-end NLP pipelines, document retrieval, and search applications, mostly for developers.

Flowise vs Helicone

Flowise is a comprehensive workflow builder for multi-step AI processes, while Helicone specializes in logging, monitoring, and analytics for LLM usage, making it more of an observability and prompt management tool than a workflow designer.

How to use Flowise?

- Start using Flowise by installing it from the official GitHub repository, then run the application locally or on a platform like Railway.

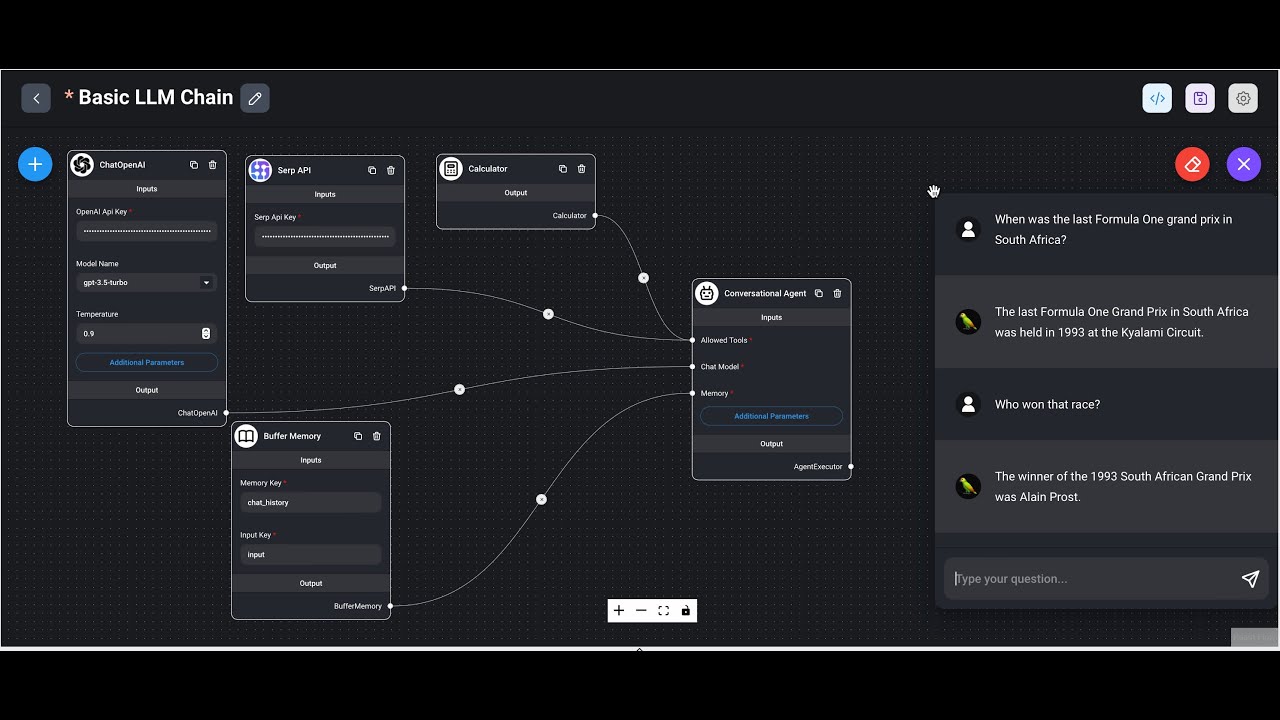

- Access the intuitive Flowise UI in your browser, where you can visually design workflows using the drag-and-drop Flowise builder.

- Build with Flowise by connecting LLM nodes, API connectors, and custom logic to create chat assistants, multi-agent processes, or data pipelines.

- Save, export, and test your flows directly within the Flowise interface for quick iteration and deployment.

- Using Flowise, even non-coders can rapidly create and modify powerful AI-driven applications with minimal setup.
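A saved flow can also be exercised from a script instead of the built-in chat. The sketch below assumes Flowise's REST prediction endpoint (`POST /api/v1/prediction/<chatflow-id>` with a JSON `question` field); the base URL and flow ID are placeholders, and an instance with API-key protection enabled would need an extra authorization header that is not shown here.

```python
import json
import urllib.request


def build_prediction_request(base_url, chatflow_id, question):
    """Build (but do not send) a POST request that asks a flow one question."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_flow(base_url, chatflow_id, question, timeout=30):
    """Send the request and return the decoded JSON response."""
    request = build_prediction_request(base_url, chatflow_id, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `ask_flow("http://localhost:3000", "<your-chatflow-id>", "Hello")` against a running instance should return the flow's answer as a dictionary; the ID is shown in the Flowise UI for each flow.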

How to self host Flowise on other VPS?

Clone the Repository

Download Flowise from GitHub using git clone https://github.com/FlowiseAI/Flowise.git.

Install Dependencies

Navigate to the project folder and install required packages using npm install or yarn install.

Configure Environment Variables

Set up environment variables such as PORT, DATABASE_URL, FLOWISE_USERNAME, and FLOWISE_PASSWORD. Include your API keys if you plan to connect LLM providers.
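One common way to keep these settings together is a `.env` file in the project folder. The helper below is a hypothetical sketch that renders such a file from a dictionary; every value is a placeholder, and the variable names simply mirror the ones listed above.

```python
def render_env_file(settings):
    """Render settings as KEY=value lines, one per variable."""
    lines = []
    for key, value in settings.items():
        if "\n" in str(value):
            raise ValueError(f"{key} must not contain a newline")
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


# All values below are placeholders, not real credentials.
example = {
    "PORT": 3000,
    "DATABASE_URL": "postgresql://user:password@localhost:5432/flowise",
    "FLOWISE_USERNAME": "admin",
    "FLOWISE_PASSWORD": "change-me",
}
print(render_env_file(example), end="")
```

Write the returned text to a file named `.env` and keep that file out of version control, since it holds credentials.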

Start Flowise

Run npm run start or use Docker for a containerized setup (docker-compose up -d).

Access the Flowise UI

Open your browser and navigate to http://localhost:3000 (or your specified port) to use the Flowise builder and manage workflows.
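To confirm the server is up without opening a browser, a small reachability check is enough. This is a minimal sketch that assumes the default address above; `flowise_url` and `is_reachable` are illustrative helper names, not part of Flowise.

```python
import urllib.error
import urllib.request


def flowise_url(host="localhost", port=3000):
    """Build the base URL of the Flowise UI."""
    return f"http://{host}:{port}"


def is_reachable(url, timeout=5):
    """Return True if the server answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even if with an error status such as 401.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout.
        return False
```

`is_reachable(flowise_url())` returns False while the process is still starting or if it is listening on a different port.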

With Railway, you can self host in a single click.

Features of Flowise

- Visual Flow Builder: Drag-and-drop interface for creating AI workflows without coding expertise.

- Multi-Agent Support: Orchestrate multiple AI agents to work together on complex tasks and processes.

- LLM Integration: Connect with popular language models like OpenAI GPT, Anthropic Claude, and local models.

- Chat Interface: Built-in chat functionality for testing and deploying conversational AI applications.

- Human-in-the-Loop: Incorporate human review and feedback directly into automated workflows.

- Memory Management: Persistent conversation memory and context management for improved interactions.

- API Integration: Connect external services and APIs to enhance workflow capabilities.

Official Pricing of Flowise Cloud service

Flowise offers a free plan with limited usage, including 2 flows and 100 predictions per month. The Starter plan costs $35/month and is suitable for individuals, offering unlimited flows and 10,000 predictions per month. The Pro plan is $65/month, targeting businesses with up to 50,000 predictions/month, more storage, unlimited workspaces, and user management. [Updated Sep’25]
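As a rough aid for choosing a tier, the prediction limits above can be turned into a lookup. The sketch uses only the prices and limits quoted in this paragraph, which are a snapshot; it ignores the free plan's 2-flow limit and the other plan features.

```python
# (name, monthly price in USD, predictions per month) as quoted above.
PLANS = [
    ("Free", 0, 100),
    ("Starter", 35, 10_000),
    ("Pro", 65, 50_000),
]


def cheapest_plan(predictions_per_month):
    """Return (name, monthly_usd) of the cheapest plan covering the volume, or None."""
    for name, price, limit in PLANS:
        if predictions_per_month <= limit:
            return name, price
    return None
```

For example, `cheapest_plan(2_500)` selects the Starter plan, while a volume above 50,000 predictions returns `None` because none of the quoted tiers covers it.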

Self hosting Flowise AI vs Flowise Paid Plans

Most core features are available in both the self-hosted and paid hosted plans since Flowise is open source. However, paid hosted plans provide fully managed infrastructure, priority support, unlimited workspaces, seamless scaling, and premium features like admin roles, permissions, and advanced storage, all with zero maintenance burden. With self-hosting, you maintain maximum flexibility and data sovereignty, but those additional managed features require the paid subscription.

Monthly cost of Self hosting Flowise AI on Railway

Hosting Flowise on Railway typically costs $5/month for the Hobby plan or $20/month for the Pro plan, covering most small to medium self-hosted projects. Prices may increase slightly based on extra usage.
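To put these figures on a yearly basis next to the Flowise Cloud Starter price quoted earlier, the arithmetic can be sketched as follows. It uses only the base plan prices from this page and leaves out usage-based charges.

```python
# Monthly prices in USD as quoted on this page; usage-based extras are not modeled.
RAILWAY_MONTHLY_USD = {"Hobby": 5, "Pro": 20}
FLOWISE_CLOUD_STARTER_MONTHLY_USD = 35


def yearly(monthly_usd):
    """Convert a monthly price to a yearly total."""
    return monthly_usd * 12


for plan, monthly in RAILWAY_MONTHLY_USD.items():
    saving = yearly(FLOWISE_CLOUD_STARTER_MONTHLY_USD) - yearly(monthly)
    print(f"Railway {plan}: ${yearly(monthly)}/year, ${saving} less than Cloud Starter")
```

With these inputs, Hobby comes to $60 per year and Pro to $240 per year, against $420 per year for Cloud Starter.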

FAQs

What is Flowise AI and why use Railway?

Flowise AI is a visual LLM flow builder; Railway enables instant, managed cloud deployment with no manual setup.

How do I deploy Flowise on Railway?

Connect the GitHub repo, set environment variables, and deploy in one click using Railway’s template.

What are the main requirements?

You need Node.js, npm, a database (SQLite/PostgreSQL), Docker (recommended), and environment variables.

Is self-hosting on Railway secure?

Yes, your data stays private, and user access is protected with usernames and passwords.

How does Flowise compare to alternatives?

Flowise offers a flexible drag-and-drop AI builder; alternatives may focus more on prompt chaining or be tied to a single vendor's ecosystem.

What’s the cost of self-hosting vs paid plans?

Self-hosting on Railway is $5–20/month, while paid Flowise Cloud starts at $35/month with premium features.

Template Content

flowise (flowiseai/flowise)

FLOWISE_PASSWORD: Password for first-time account creation

FLOWISE_USERNAME: Username for first-time account creation