Deploy Laminar AI

Open-source platform for tracing and evaluating AI applications.

- PostgreSQL: railwayapp-templates/postgres-ssl:latest (volume: /var/lib/postgresql/data)
- Laminar AI - Frontend2: lmnr-ai/lmnr
- ClickHouse: clickhouse/clickhouse-server (volume: /var/)
- Laminar AI - Frontend: lmnr-ai/frontend
- query-engine: lmnr-ai/query-engine
- RabbitMQ: rabbitmq
- Laminar AI - Server: lmnr-ai/app-server

Deploy and Host Laminar AI on Railway

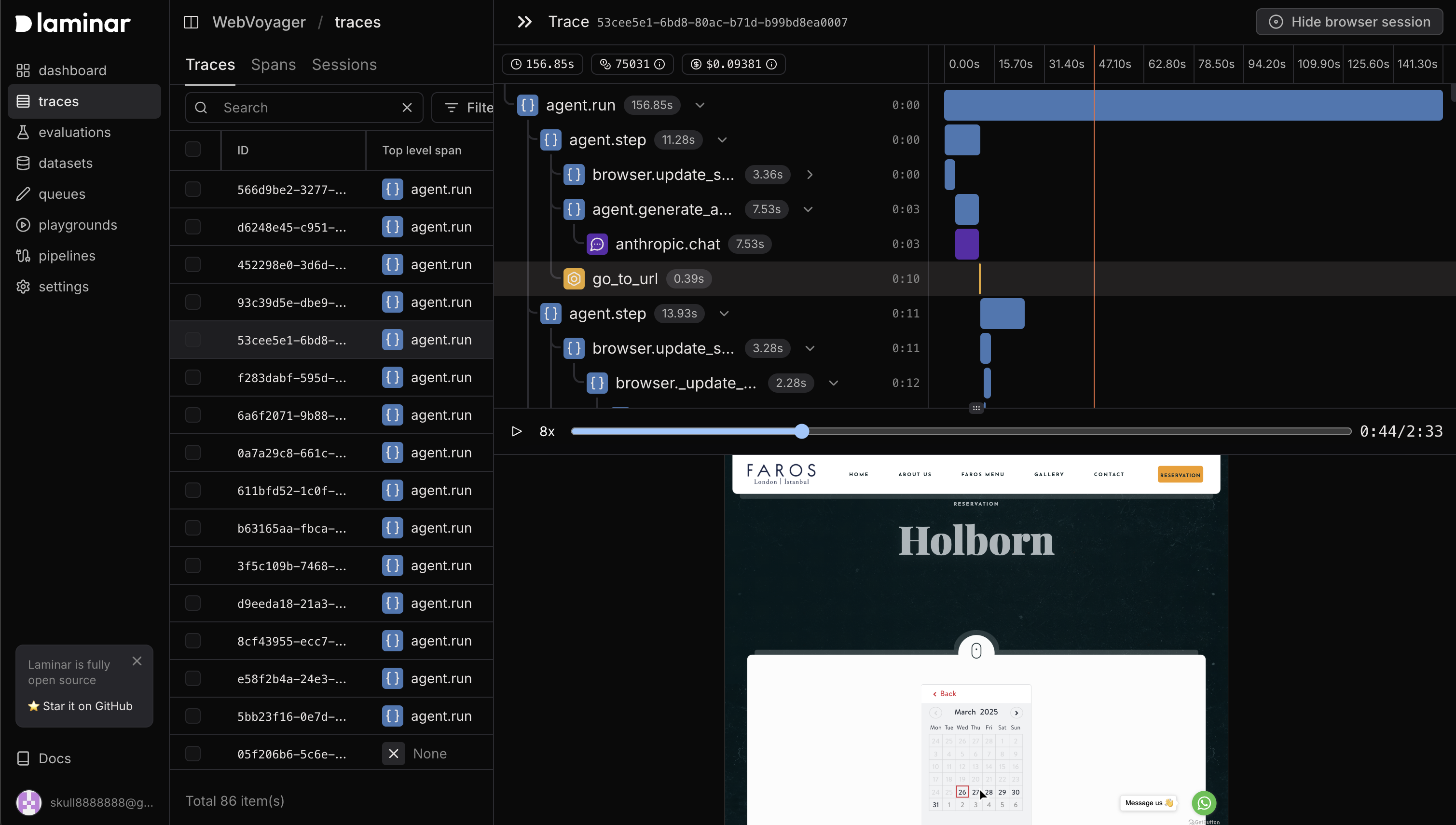

Laminar is the open-source platform for tracing and evaluating AI applications.

- Tracing
  - OpenTelemetry-based automatic tracing of common AI frameworks and SDKs (LangChain, OpenAI, Anthropic, ...) with just 2 lines of code (powered by OpenLLMetry).
  - Trace input/output, latency, cost, and token count.
  - Function tracing with the observe decorator/wrapper.
  - Image tracing.
- Evals
  - Run evals in parallel with a simple SDK.
- Datasets
  - Export production trace data to datasets.
  - Run evals on hosted datasets.
- Built for scale
  - Written in Rust 🦀
  - Traces are sent via gRPC to keep performance overhead low.
- Modern open-source stack
  - RabbitMQ for the message queue, Postgres for data, ClickHouse for analytics.
- Dashboards for statistics, traces, evaluations, and tags.
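The idea behind running evals in parallel can be illustrated with a minimal, library-agnostic sketch: each datapoint is passed through an executor (the code under test), and its output is then scored by one or more evaluators, with datapoints processed concurrently by a thread pool. This is not the Laminar SDK's evaluation API; run_evals, exact_match, and the "data"/"target" datapoint keys are hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

# Hypothetical types for illustration only (not the Laminar SDK API).
Datapoint = Dict[str, Any]
Evaluator = Callable[[Any, Any], float]


def run_evals(
    data: List[Datapoint],
    executor: Callable[[Any], Any],
    evaluators: Dict[str, Evaluator],
    max_workers: int = 4,
) -> List[Dict[str, float]]:
    """Run `executor` on every datapoint in parallel and score each output."""

    def run_one(point: Datapoint) -> Dict[str, float]:
        output = executor(point["data"])
        # Each evaluator compares the executor's output with the expected target.
        return {name: fn(output, point["target"]) for name, fn in evaluators.items()}

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so scores line up with `data`.
        return list(pool.map(run_one, data))


def exact_match(output: Any, target: Any) -> float:
    """Score 1.0 when the output equals the target exactly, else 0.0."""
    return 1.0 if output == target else 0.0


if __name__ == "__main__":
    dataset = [
        {"data": "2+2", "target": "4"},
        {"data": "3+3", "target": "6"},
        {"data": "1+1", "target": "3"},
    ]
    # A toy "model" that adds the integers in a string like "2+2".
    toy_model = lambda q: str(sum(int(x) for x in q.split("+")))
    print(run_evals(dataset, toy_model, {"exact_match": exact_match}))
    # prints: [{'exact_match': 1.0}, {'exact_match': 1.0}, {'exact_match': 0.0}]
```

Threads suit this pattern because real executors usually spend most of their time waiting on LLM API calls rather than on CPU work.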

About Hosting Laminar AI

- Laminar AI (Frontend) – Next.js frontend and backend

- Laminar AI (Server) – core Rust backend

- RabbitMQ – message queue for reliable trace processing

- PostgreSQL – Postgres database for all the application data

- ClickHouse – columnar OLAP database for more efficient trace and tag analytics
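To sanity-check that the backing services above are reachable, a small script run inside the Railway project can open a TCP connection to each one. This is a minimal sketch that assumes Railway private-networking hostnames of the form <service>.railway.internal and each service's usual default port (Postgres 5432, RabbitMQ AMQP 5672, ClickHouse HTTP 8123). The hostnames, ports, and the SERVICES, is_reachable, and check_services names are assumptions, so substitute the values from your own project's variables.

```python
import socket
from typing import Dict, Tuple

# Hypothetical hostnames: Railway private networking exposes services as
# <service-name>.railway.internal, but check the names in your own project.
# Ports are each service's usual default (assumed, not read from the template).
SERVICES: Dict[str, Tuple[str, int]] = {
    "postgres": ("postgres.railway.internal", 5432),
    "rabbitmq": ("rabbitmq.railway.internal", 5672),
    "clickhouse": ("clickhouse.railway.internal", 8123),
}


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS resolution failures, refused connections, and timeouts.
        return False


def check_services(services: Dict[str, Tuple[str, int]]) -> Dict[str, bool]:
    """Map each service name to whether its host:port accepts TCP connections."""
    return {name: is_reachable(host, port) for name, (host, port) in services.items()}
```

Calling check_services(SERVICES) from a shell inside the project returns a dict mapping each service name to True or False. A successful TCP connection only shows the port is open; it does not verify credentials or that the service is healthy.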

Common Use Cases

- Tracing

- Evaluations

- Labeling

Dependencies for Laminar AI Hosting

- RabbitMQ – message queue for reliable trace processing

- PostgreSQL – Postgres database for all the application data

- ClickHouse – columnar OLAP database for more efficient trace and tag analytics

Contributing

To run and build Laminar locally, or to learn more about the Docker Compose files, follow the Contributing guide in the lmnr-ai/lmnr repository.

Before You Start

You need to configure the SDK with the base URL of your deployment and the correct ports. See https://docs.lmnr.ai/self-hosting/setup

First, create a project and generate a project API key.
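One way to keep the base URL and ports out of your code is to read them from environment variables and pass them to Laminar.initialize. This is a minimal sketch: the variable names LMNR_BASE_URL, LMNR_HTTP_PORT, and LMNR_GRPC_PORT, the fallback ports 8000 (HTTP) and 8001 (gRPC), and the helper name laminar_init_kwargs are assumptions for a typical self-hosted setup. Confirm the keyword names base_url, http_port, and grpc_port against the self-hosting setup guide linked above for your SDK version.

```python
import os
from typing import Any, Dict, Mapping, Optional


def laminar_init_kwargs(env: Optional[Mapping[str, str]] = None) -> Dict[str, Any]:
    """Build keyword arguments for Laminar.initialize from environment variables.

    Variable names and default ports are assumptions for a self-hosted setup.
    """
    env = os.environ if env is None else env
    return {
        # Raises KeyError if the project API key is missing, so misconfiguration fails loudly.
        "project_api_key": env["LMNR_PROJECT_API_KEY"],
        "base_url": env.get("LMNR_BASE_URL", "http://localhost"),
        "http_port": int(env.get("LMNR_HTTP_PORT", "8000")),
        "grpc_port": int(env.get("LMNR_GRPC_PORT", "8001")),
    }
```

With the SDK installed, usage would be Laminar.initialize(**laminar_init_kwargs()). On Railway, these variables can be set on the service that runs your instrumented application.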

TS quickstart

npm add @lmnr-ai/lmnr

This installs the Laminar TS SDK and all instrumentation packages (OpenAI, Anthropic, LangChain, ...).

To start tracing LLM calls, add:

import { Laminar } from '@lmnr-ai/lmnr';

// baseUrl: the URL of your self-hosted Laminar deployment (see the setup guide above)
Laminar.initialize({ baseUrl: "", projectApiKey: process.env.LMNR_PROJECT_API_KEY });

To trace the inputs and outputs of a function, use the observe wrapper.

import { OpenAI } from 'openai';
import { observe } from '@lmnr-ai/lmnr';

const client = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

// observe() invokes the callback with the trailing arguments (topic) inside a traced span
const poemWriter = async (topic: string) =>
  observe({ name: 'poemWriter' }, async (topic: string) => {
    const response = await client.chat.completions.create({
      model: "gpt-4o-mini",
      messages: [{ role: "user", content: `write a poem about ${topic}` }],
    });
    return response.choices[0].message.content;
  }, topic);

await poemWriter('laminar flow');

Python quickstart

pip install --upgrade 'lmnr[all]'

This installs the Laminar Python SDK and all instrumentation packages. See the Laminar documentation for the full list of supported instruments.

To start tracing LLM calls, add:

from lmnr import Laminar

Laminar.initialize(base_url="", project_api_key="")

To trace the inputs and outputs of a function, use the @observe() decorator.

import os

from openai import OpenAI
from lmnr import observe, Laminar

# base_url: the URL of your self-hosted Laminar deployment
Laminar.initialize(base_url="", project_api_key="")

client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

@observe()  # annotate all functions you want to trace
def poem_writer(topic):
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[
            {"role": "user", "content": f"write a poem about {topic}"},
        ],
    )
    poem = response.choices[0].message.content
    return poem

if __name__ == "__main__":
    print(poem_writer(topic="laminar flow"))

Running the code above will result in the following trace.
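Conceptually, a tracing decorator like observe wraps a function, records its name, arguments, return value, and duration as a span, and then returns the result unchanged. The toy version below shows that idea using only the standard library. It is not Laminar's implementation (which exports OpenTelemetry spans to the server); toy_observe and SPANS are hypothetical names for illustration.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for a trace exporter (hypothetical, for illustration).
SPANS: List[Dict[str, Any]] = []


def toy_observe(name: str = "") -> Callable:
    """Decorator factory that records inputs, output, and latency of each call that returns normally."""

    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)  # keep the wrapped function's __name__ and docstring
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            output = fn(*args, **kwargs)
            SPANS.append(
                {
                    "name": name or fn.__name__,
                    "input": {"args": args, "kwargs": kwargs},
                    "output": output,
                    "latency_s": time.perf_counter() - start,
                }
            )
            return output

        return wrapper

    return decorator


@toy_observe()
def add(a: int, b: int) -> int:
    return a + b


if __name__ == "__main__":
    add(2, b=3)
    print(SPANS[-1]["name"], SPANS[-1]["input"], SPANS[-1]["output"])
    # prints: add {'args': (2,), 'kwargs': {'b': 3}} 5
```

A real tracer also records exceptions and nests spans for calls made inside the decorated function, which is how the LLM call in poem_writer ends up as a child of the poem_writer span.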

Client libraries

To learn more about instrumenting your code, check out our client libraries:

- Python: lmnr
- TypeScript: @lmnr-ai/lmnr

Why Deploy Laminar AI on Railway?

Deploying Laminar AI on Railway lets you run its frontend, server, and backing services (PostgreSQL, ClickHouse, RabbitMQ) together in one project with minimal setup. You can host your other servers, databases, AI agents, and more on Railway as well.

Deployment Dependencies

none

Template Content

- Laminar AI - Frontend2: lmnr-ai/lmnr (variable: OPENAI_API_KEY)
- ClickHouse: clickhouse/clickhouse-server
- Laminar AI - Frontend: ghcr.io/lmnr-ai/frontend (variable: OPENAI_API_KEY)
- query-engine: ghcr.io/lmnr-ai/query-engine
- RabbitMQ: rabbitmq
- Laminar AI - Server: ghcr.io/lmnr-ai/app-server