Deploy Langflow [Updated Feb '26]

Langflow [Feb '26] (LLM Workflow Builder, Flowise alternative) Self Host

Deploy and Host Managed Langflow with One Click on Railway

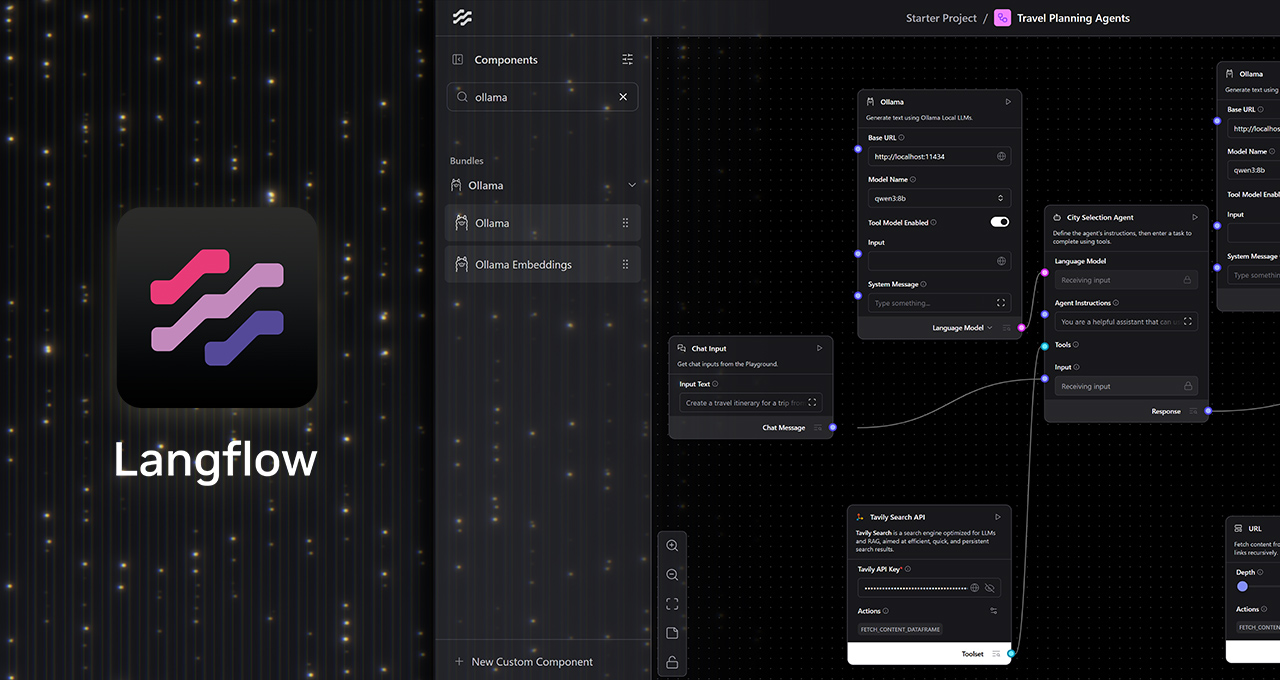

Langflow is a powerful, open‑source visual programming tool for building AI workflows. It allows you to design, test, and deploy large language model (LLM) applications without complex coding. Whether you are experimenting with AI agents, automating tasks, or prototyping new applications, Langflow gives you a drag‑and‑drop canvas to connect components together, making AI development faster and easier. In this guide, we’ll cover what you need to know about self‑hosting Langflow, including its features, pricing, alternatives, and how to run it on Railway with a single click.

About Hosting Langflow on Railway (Self Hosting Langflow on Railway)

When you self host Langflow, you get full control over your workflows, data, and environment. Hosting Langflow on Railway eliminates the pain of manual server setup. Railway automatically provisions secure containers, manages scaling, and lets you deploy Langflow instantly. By running Langflow on Railway, you can:

- Build AI workflows visually without coding.

- Connect external APIs, vector databases, or custom Python logic.

- Test your apps in real time on a managed platform.

Unlike traditional hosting, where you configure every server detail, Railway streamlines the process so you can focus on building your Langflow projects instead of maintaining infrastructure.

Why Deploy Managed Langflow Service on Railway

Deploying Langflow on Railway provides the best balance of flexibility and simplicity. Here’s why:

- Effortless Setup: One‑click deployment saves hours of manual configuration.

- Automated Scaling: Railway auto‑scales Langflow as your workloads increase.

- Simplified Maintenance: No need to patch, monitor, or manage servers.

- Full Control: Even though Railway handles infrastructure, you still fully control your Langflow workflows.

Railway vs DigitalOcean

DigitalOcean requires you to manually install Docker, configure ports, and set up persistent storage for Langflow. With Railway, all of that is automated, so you can launch Langflow in minutes.

Railway vs Linode

Linode gives you VPS control but expects you to handle OS updates, networking, and security. Railway automates these tasks, allowing you to focus on building Langflow workflows.

Railway vs Vultr

Vultr requires hands‑on disk management and monitoring. Railway automates scaling and gives you an intuitive interface to run hosted Langflow easily.

Railway vs AWS Lightsail

AWS Lightsail is powerful but adds complexity with IAM roles, manual scaling, and networking rules. Railway simplifies this by giving you direct deployment pipelines for Langflow.

Common Use Cases for Langflow

Langflow is not just a sandbox; it’s a serious tool for AI development. Here are 5 common use cases:

- Chatbots and AI Agents: Build custom chatbots powered by GPT models and integrate them with APIs.

- Workflow Automation: Connect APIs and logic to automate repetitive business processes.

- Knowledge Retrieval (RAG): Combine vector databases with LLMs for advanced question‑answering systems.

- Prototyping AI Apps: Rapidly design and test new ideas with a drag‑and‑drop builder.

- Teaching and Learning: Perfect for learning how LLMs and AI workflows operate without writing full codebases.

Dependencies for Langflow Hosted on Railway

To host Langflow on Railway, you typically need:

- Python Runtime: Langflow is a Python‑based application.

- Docker Support: Railway runs Langflow in Docker containers.

- Database: Optionally connect to vector databases like Pinecone, Weaviate, or PostgreSQL with pgvector.

Deployment Dependencies for Managed Langflow Service

A managed Langflow deployment comes pre‑configured with:

- Secure containerized environment.

- Prebuilt Docker images for Langflow.

- Automatic logs and monitoring through Railway.

Implementation Details for Langflow Workflows

When deploying Langflow, you may set environment variables for API keys, database URLs, and authentication tokens. For example:

- OPENAI_API_KEY – to connect with GPT models.

- DB_CONNECTION_STRING – to connect with external databases.

- LANGFLOW_PORT – the port your Langflow instance listens on.
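As a rough illustration of how a script might read these settings, the sketch below collects the three variables named above from the process environment. The `load_settings` helper is hypothetical, and the fallback port of 7860 is an assumption borrowed from the localhost URL used in the manual setup steps of this guide; neither is part of Langflow itself.

```python
import os
from typing import Mapping, Optional


def load_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect the variables named above into a plain dict.

    `load_settings` is a hypothetical helper for illustration only.
    """
    env = os.environ if env is None else env

    # Fall back to 7860 (an assumed default) when LANGFLOW_PORT is unset.
    port = int(env.get("LANGFLOW_PORT", "7860"))
    if not 1 <= port <= 65535:
        raise ValueError(f"LANGFLOW_PORT out of range: {port}")

    return {
        "openai_api_key": env.get("OPENAI_API_KEY"),  # None if not set
        "db_connection_string": env.get("DB_CONNECTION_STRING"),
        "port": port,
    }


if __name__ == "__main__":
    settings = load_settings()
    # Avoid printing the secret itself; only report whether it is present.
    print("OpenAI key set:", settings["openai_api_key"] is not None)
    print("Port:", settings["port"])
```

Passing a mapping instead of relying on `os.environ` keeps the helper easy to exercise with test values.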

How does Langflow Compare to Other Platforms?

Langflow sits at the intersection of no‑code tools and AI orchestration. Let’s see how it compares to alternatives:

Langflow vs LangChain

LangChain is a Python framework that requires coding. Langflow provides a visual UI built on top of LangChain, making it easier to design workflows.

Langflow vs Flowise

Flowise is another visual LLM orchestration tool. Langflow offers a larger set of components and integrations, with a cleaner UI and growing community support.

Langflow vs Airflow

Apache Airflow is for general workflow orchestration (data pipelines). Langflow focuses specifically on AI workflows with native LLM support.

Langflow vs Bolt DIY

Bolt DIY helps you build apps via chat interfaces. Langflow is more focused on visual drag‑and‑drop workflows for AI orchestration.

Langflow vs Hosted SaaS Tools

Unlike closed SaaS AI builders, Langflow is open‑source, so you can download it, inspect the code on GitHub, and fully control your hosting setup.

How to Use Langflow

Using Langflow is simple:

- Deploy Langflow – Either self host or run on Railway.

- Open the Langflow UI – Access the drag‑and‑drop interface in your browser.

- Build Workflows – Add nodes for models, prompts, memory, tools, and APIs.

- Run and Debug – Execute workflows in real time, inspect logs, and adjust parameters.

Langflow provides a visual way to test LLM applications without writing long Python scripts.
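Once a flow exists, it can also be triggered from code rather than from the canvas. The sketch below builds (but does not send) an HTTP request for a flow run. The endpoint path `/api/v1/run/<flow_id>`, the payload field names, and the `x-api-key` header are assumptions about Langflow's REST API and should be checked against the documentation for your version; the base URL and flow ID are placeholders.

```python
import json
import urllib.request
from typing import Optional


def build_run_request(base_url: str, flow_id: str, message: str,
                      api_key: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request asking a Langflow instance to run a flow.

    The path and payload keys are assumed, not confirmed by this guide.
    """
    url = f"{base_url.rstrip('/')}/api/v1/run/{flow_id}"
    payload = {
        "input_value": message,
        "input_type": "chat",
        "output_type": "chat",
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["x-api-key"] = api_key
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = build_run_request("http://localhost:7860", "YOUR_FLOW_ID", "Hello")
    print(req.get_method(), req.full_url)
    # To send it against a running instance:
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp))
```

Separating request construction from sending makes it possible to inspect the URL and body before pointing the script at a live deployment.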

How to Self Host Langflow on Other VPS Providers

If you prefer not to use Railway, you can self host Langflow manually:

Clone the Repository

git clone https://github.com/langflow-ai/langflow.git

cd langflow

Install Dependencies

Make sure you have Python 3.9+, Node.js, and Docker installed.

pip install -r requirements.txt

Configure Environment Variables

Create a .env file and set variables like OPENAI_API_KEY, LANGFLOW_PORT, etc.
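A .env file is simply a list of KEY=VALUE lines. Docker Compose reads it on its own, so the sketch below is only meant to show the format and how such a file could be checked before starting the container. The `parse_env_text` helper and the sample values are hypothetical.

```python
def parse_env_text(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"Expected KEY=VALUE, got: {raw_line!r}")
        # Strip one pair of matching surrounding quotes, if present.
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


# Placeholder content for illustration; never commit real keys.
EXAMPLE_ENV = """\
# Langflow settings
OPENAI_API_KEY="sk-your-key-here"
LANGFLOW_PORT=7860
"""

if __name__ == "__main__":
    print(parse_env_text(EXAMPLE_ENV))
```

This handles only the simplest cases (no variable expansion, no multi-line values), which is usually enough for a sanity check.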

Start Langflow with Docker

docker-compose up --build

Access Langflow Dashboard

Visit http://localhost:7860 (or the port you configured) in your browser.
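After the container starts, it can take a little while before the UI responds. A small readiness check such as the sketch below polls the URL until it answers. The `wait_until_up` helper, the retry counts, and the injectable `opener` and `sleep` parameters are illustrative choices; the URL is the one given above.

```python
import time
import urllib.error
import urllib.request


def wait_until_up(url: str, attempts: int = 10, delay: float = 2.0,
                  opener=urllib.request.urlopen, sleep=time.sleep) -> bool:
    """Return True once `url` answers with HTTP 200, else False after `attempts` tries."""
    for attempt in range(attempts):
        try:
            with opener(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # Server is not accepting connections yet.
        if attempt < attempts - 1:
            sleep(delay)  # Wait before the next try.
    return False


# Example (requires a running instance):
# if wait_until_up("http://localhost:7860"):
#     print("Langflow is up")
```

The `opener` and `sleep` arguments exist so the function can be exercised without a network connection or real delays.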

This gives you a self‑hosted Langflow instance, but requires manual setup and maintenance. Railway removes all of this complexity.

Features of Langflow

Langflow offers a rich set of features for AI workflow development:

- Visual Workflow Builder – Drag‑and‑drop interface for LLM apps.

- LangChain Integration – Built directly on top of LangChain.

- Multiple Model Support – Use OpenAI, Anthropic, Hugging Face models, and more.

- Vector Database Connectors – Integrations for Pinecone, Weaviate, ChromaDB, pgvector.

- Reusable Components – Prompts, chains, agents, and memory modules.

- Export/Import Workflows – Share projects easily across teams.

- Cross‑Platform Support – Works on Linux, macOS, and Windows.
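Exported workflows are plain files, so they can be inspected with a short script before sharing. The sketch below counts the component types in a flow. It assumes the export is JSON with a top-level `data` object holding a `nodes` list, where each node stores its component type under `data.type`; that layout is an assumption and may differ between Langflow versions, and the sample flow is made up.

```python
import json
from collections import Counter


def summarize_flow(flow_json: str) -> Counter:
    """Count component types in an exported flow (assumed JSON layout)."""
    flow = json.loads(flow_json)
    nodes = flow.get("data", {}).get("nodes", [])
    # Each node is assumed to carry its component type under data.type.
    return Counter(node.get("data", {}).get("type", "unknown") for node in nodes)


# Made-up sample that mimics the assumed layout; not a real export.
SAMPLE_FLOW = json.dumps({
    "name": "demo",
    "data": {
        "nodes": [
            {"id": "a", "data": {"type": "ChatInput"}},
            {"id": "b", "data": {"type": "Prompt"}},
            {"id": "c", "data": {"type": "ChatOutput"}},
        ],
        "edges": [],
    },
})

if __name__ == "__main__":
    for component, count in summarize_flow(SAMPLE_FLOW).items():
        print(component, count)
```

A summary like this can help a teammate see at a glance which models and connectors an imported flow expects.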

Official Pricing of Langflow

Langflow itself is open‑source and free to use. However, hosting Langflow may involve costs depending on your platform:

- Railway Pricing: Starts around $5–$10 per month for hosting Langflow, with additional charges for databases and scaling.

- Other VPS Pricing: DigitalOcean, Linode, or Vultr typically start at $5/month but require more setup effort.

If you use external APIs like OpenAI, you’ll also pay for token usage separately.

Self Hosting Langflow vs Paid Managed Services

- Self Hosting Langflow: Free, full control, but requires manual setup and updates.

- Managed Langflow on Railway: Slight monthly cost, but automated scaling, updates, and one‑click deployment.

Monthly Cost of Self Hosting Langflow on Railway

A typical Railway Langflow deployment costs $5–$15 per month, depending on usage and whether you add a managed PostgreSQL database for vector storage.

FAQs

What is Langflow?

Langflow is an open‑source, visual programming tool for building AI workflows with drag‑and‑drop simplicity.

How do I self host Langflow?

You can self host Langflow using Docker or deploy it directly on Railway for one‑click setup.

What are the key features of Langflow?

Key Langflow features include a visual workflow builder, model integrations, vector database support, reusable components, and cross‑platform compatibility.

How do I deploy Langflow on Railway?

Click the Deploy Now button on Railway, connect your GitHub repo or use the Langflow template, set environment variables, and Railway handles the rest.

Can I use Langflow on Windows?

Yes, Langflow works on Linux, macOS, and Windows environments.

Where can I download Langflow?

You can download Langflow directly from the official Langflow GitHub repository.

What are common Langflow workflows?

Common Langflow workflows include chatbots, RAG pipelines, knowledge assistants, and API‑powered automation chains.

Is there Langflow documentation?

Yes, the official Langflow documentation is available on GitHub, including guides for setup, components, and workflow examples.

How much does Langflow cost?

Langflow itself is free. Hosting costs vary depending on your platform. Langflow pricing on Railway typically starts around $5/month.
