Deploy Letta [Updated Feb ’26] (Open-Source AI Agent Framework & Orchestration Platform)


Service image: letta/letta:latest (persistent volume at /data/postgresql)

Deploy and Host Letta AI Service with one click on Railway

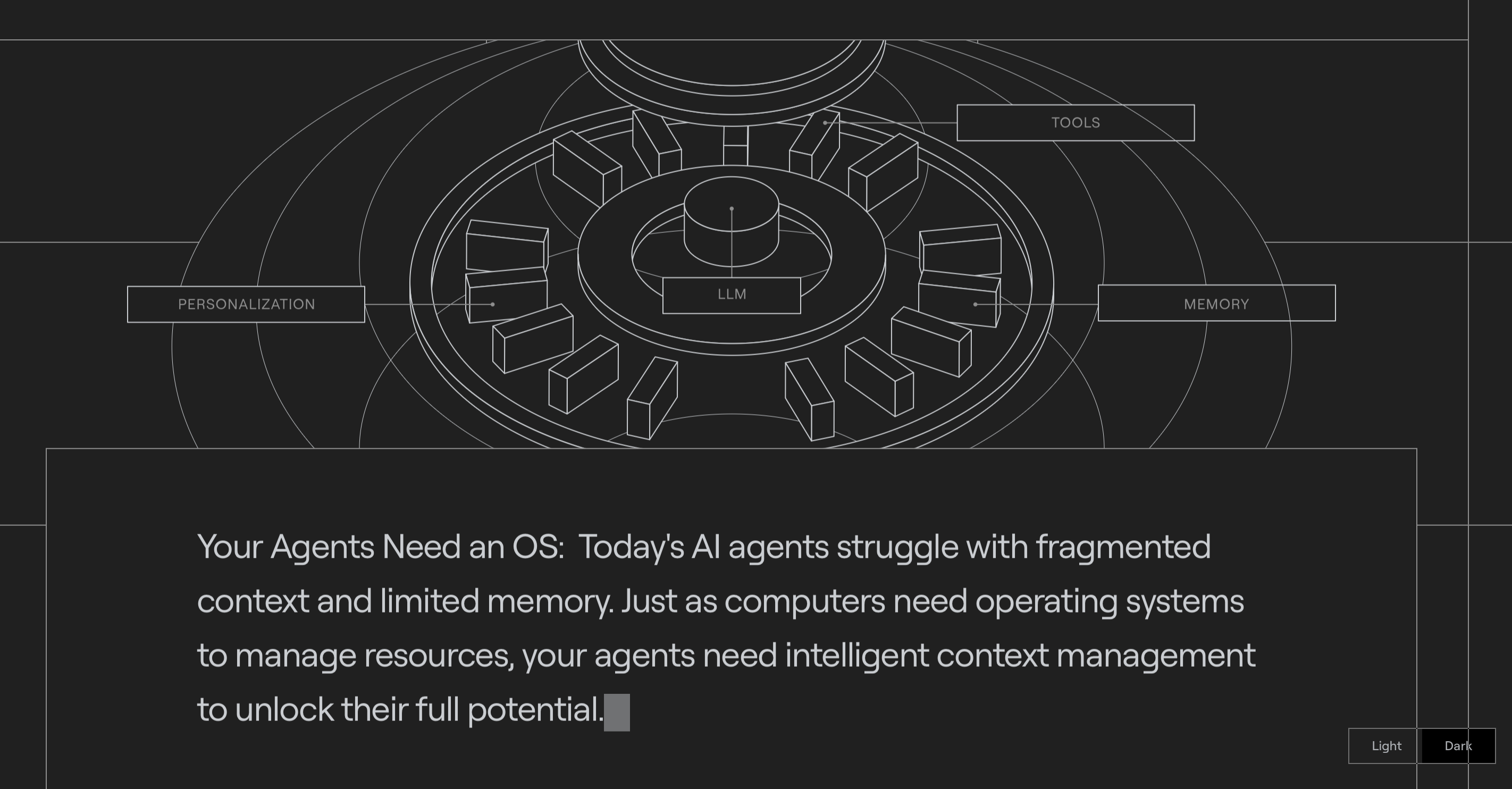

Letta (formerly MemGPT) is an open-source framework for building stateful AI agents, such as chat and voice assistants, that keep long-term memory. It is aimed at developers and organizations who want to run their own intelligent assistants. Unlike closed-source AI services that store your data on external servers, Letta can be self-hosted, so agent state and memory stay in a database you control.

Why Deploy a Managed Letta Service on Railway

Deploying a managed Letta service on Railway gives you automation, scalability, and simplicity. Instead of setting up servers, managing dependencies, or maintaining uptime, you can deploy Letta with one click. Railway handles provisioning, networking, and scaling while you focus on configuring your agents and integrating them into your apps.

Railway vs DigitalOcean:

DigitalOcean requires manual configuration: installing Docker and a database and setting up networking before deploying Letta. Railway instead provides one-click deployment with a pre-built environment and automated scaling, saving hours of manual setup.

Railway vs Linode:

Hosting Letta on Linode involves managing storage, firewall rules, and OS-level configuration. Railway automates these steps with managed containers, so Letta runs with far less manual tuning.

Railway vs AWS Lightsail:

AWS Lightsail users often face complex networking and IAM permission setups. Railway simplifies this by providing direct deployment pipelines and instant domain setup for your Letta instance.

Railway vs Hetzner:

Hetzner provides affordable VPS solutions but expects users to handle everything, from system security to process monitoring. Railway manages all of this automatically, letting you deploy Letta securely and focus on building and configuring your agents.

Common Use Cases for Letta

- Personal AI Assistant: Host Letta to build your personal chatbot or voice assistant that can manage notes, tasks, or answer questions.

- Customer Support Automation: Deploy Letta to respond to customer queries on your website, powered by your preferred language model.

- Internal Knowledge Assistant: Connect Letta to company data so employees can query documents, policies, and project information instantly.

- AI Voice Interface: Integrate Letta with speech APIs for a voice-controlled experience in IoT devices or apps.

- Educational Bots: Create personalized tutors with Letta that explain topics, generate quizzes, or help students learn interactively.

Dependencies for Letta hosted on Railway

To host Letta on Railway, you typically need:

- The letta/letta:latest Docker image

- A PostgreSQL database for agent state and memory (this template mounts a volume at /data/postgresql)

- API keys for your chosen LLM provider (OpenAI, Anthropic, or similar)

- Environment variables such as OPENAI_API_KEY and LETTA_SERVER_PASSWORD
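Before starting the service, it can help to fail fast when a required variable is missing. The sketch below is a hypothetical preflight helper, not part of Letta itself; it assumes OPENAI_API_KEY and LETTA_SERVER_PASSWORD are the variables you require, so adjust REQUIRED_VARS to match the providers and options you actually configure.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Hypothetical required set for this sketch; add ANTHROPIC_API_KEY, LETTA_PG_URI, etc. as needed.
REQUIRED_VARS = ("OPENAI_API_KEY", "LETTA_SERVER_PASSWORD")


def missing_env_vars(
    env: Optional[Mapping[str, str]] = None,
    required: Iterable[str] = REQUIRED_VARS,
) -> List[str]:
    """Return the names in `required` that are unset or blank in `env` (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]
```

Call `missing_env_vars()` at the top of a startup script and exit with a clear message if the returned list is non-empty; treating whitespace-only values as missing catches variables that were pasted in but left blank.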

Deployment Dependencies for Managed Letta Service

Managed Letta service on Railway automatically provisions compute resources, database connections, and secure environment variables. No need for manual installations - Railway’s deployment flow handles dependency resolution and service linkage.

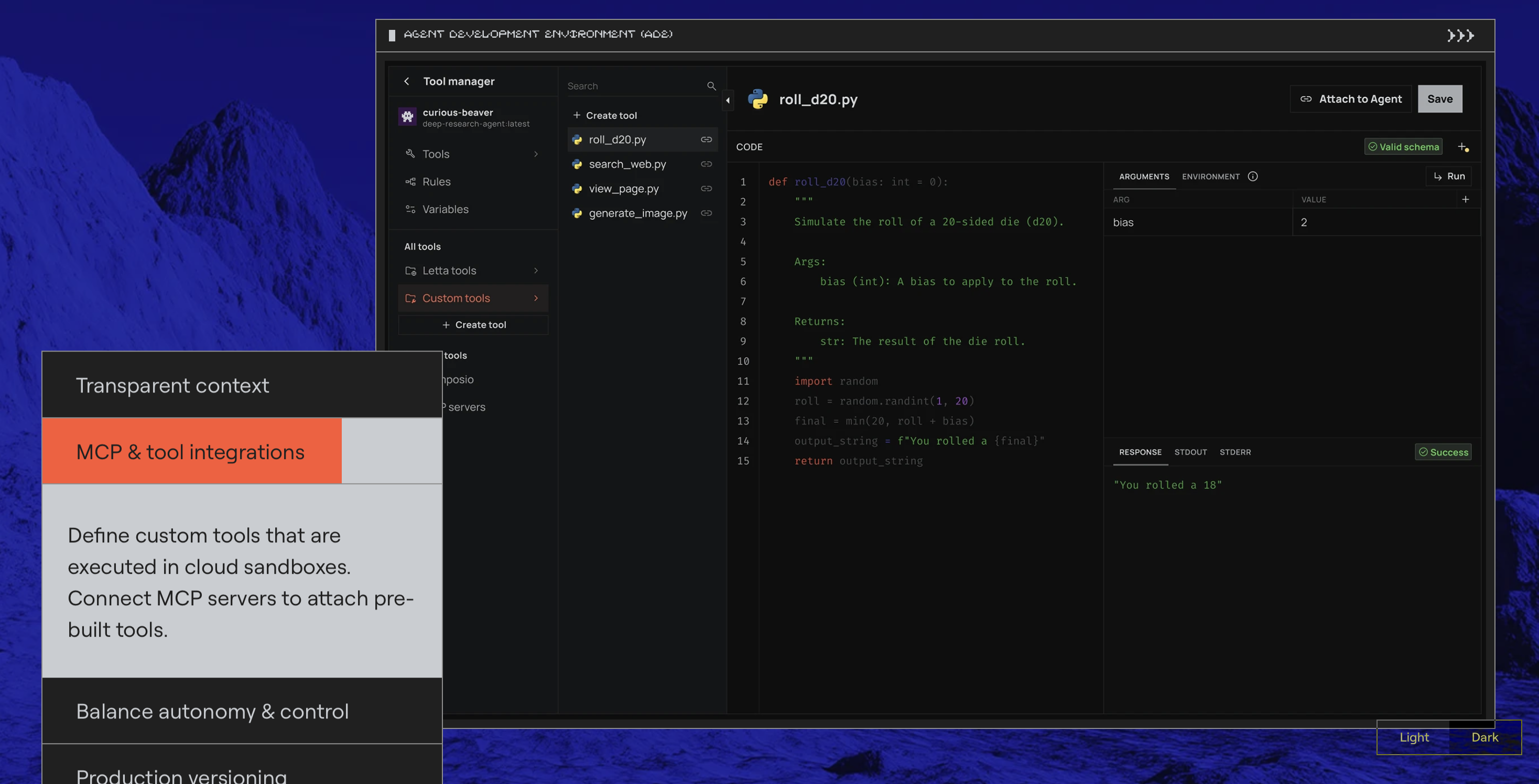

Implementation Details for Letta (AI Assistant Framework)

When deploying Letta, configure essential environment variables such as:

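- OPENAI_API_KEY: Connects your agents to OpenAI models (set ANTHROPIC_API_KEY or another provider's key if you use a different LLM).

- LETTA_SERVER_PASSWORD: Password required to access the server's API.

- LETTA_PG_URI: Optional connection string for an external PostgreSQL database that stores agent state, memory, and message history.

The Letta server listens on port 8283 by default.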

Once deployed, you can access the Letta dashboard or API endpoints directly via your Railway-provided domain.
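A quick way to confirm the deployment is reachable is to call a health endpoint on your Railway domain. The sketch below assumes Letta's REST API exposes GET /v1/health/ and uses `my-letta.up.railway.app` as a hypothetical domain; if your Letta version requires the server password on every request, add an Authorization header as well.

```python
import json
import urllib.request


def health_url(domain: str) -> str:
    """Build the health-check URL from a bare domain or a full base URL."""
    base = domain if domain.startswith(("http://", "https://")) else f"https://{domain}"
    return base.rstrip("/") + "/v1/health/"


def check_health(domain: str, timeout: float = 10.0) -> dict:
    """GET the health endpoint and return the decoded JSON body (raises on HTTP errors)."""
    with urllib.request.urlopen(health_url(domain), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `check_health("my-letta.up.railway.app")` should return a small JSON object once the service is up; an exception usually means the domain, port, or deployment is not ready yet.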

How does Letta compare to other AI assistant frameworks?

Letta vs Open Assistant

Letta focuses on developer customization and local privacy, while Open Assistant is more community-driven with shared datasets. Letta offers a simpler integration pipeline and privacy-first model connections.

Letta vs Rasa

Rasa is primarily NLP-based and best for rule-driven conversational AI. Letta, on the other hand, leverages LLMs like GPT or Claude for natural, generative responses while supporting API-level extensibility.

Letta vs Botpress

Botpress provides a GUI-based workflow builder, while Letta is more developer-centric - perfect for coders who prefer direct control through APIs and custom logic.

Letta vs HuggingChat

HuggingChat depends on Hugging Face servers and models. Letta lets you self-host and connect to any model, giving full control of data and scalability.

How to use Letta

- Deploy Letta: Click the one-click Railway deploy button.

- Set Environment Variables: Add API keys (like OpenAI) and database URLs.

- Access the Dashboard: Railway gives a live URL to access your Letta instance.

- Create Assistant: Define your assistant’s personality and behavior.

- Integrate with Apps: Connect to your website, Slack, Discord, or custom apps using Letta APIs.

Once configured, Letta can manage sessions, remember context, and respond intelligently - powered by your chosen AI model.
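The "Create Assistant" step can also be done through the API. The sketch below prepares, but does not send, a request to create an agent whose persona and user details live in two core memory blocks. It assumes the POST /v1/agents/ endpoint with `model`, `embedding`, and `memory_blocks` fields, and that the server password is sent as a Bearer token; the model handles shown are example choices, so check the API reference for your Letta version.

```python
import json
import urllib.request


def build_create_agent_request(
    base_url: str,
    password: str,
    persona: str,
    human: str,
    model: str = "openai/gpt-4o-mini",                 # example model handle (assumption)
    embedding: str = "openai/text-embedding-3-small",  # example embedding handle (assumption)
) -> urllib.request.Request:
    """Prepare (not send) a POST /v1/agents/ request for a new agent."""
    payload = {
        "model": model,
        "embedding": embedding,
        # Core memory: the agent's personality and what it knows about the user.
        "memory_blocks": [
            {"label": "persona", "value": persona},
            {"label": "human", "value": human},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/agents/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the server password is accepted as a Bearer token.
            "Authorization": f"Bearer {password}",
        },
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen(...)` and decode the JSON body; the returned agent object includes an `id` you can use for later message calls.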

How to Self Host Letta on Other VPS

Clone the Repository

Clone from GitHub: https://github.com/letta-ai/letta.git

Install Dependencies

Ensure your VPS has Docker installed. The letta/letta image bundles PostgreSQL, so a separate database is only needed if you point LETTA_PG_URI at one.

Configure Environment Variables

Set the following before starting:

OPENAI_API_KEY=your_api_key

LETTA_SERVER_PASSWORD=your_password

LETTA_PG_URI=your_database_url (optional; omit it to use the image's bundled PostgreSQL)

Start the Letta Application

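Run the letta/letta:latest image with Docker, passing the variables above, publishing port 8283, and mounting a host directory at /var/lib/postgresql/data so the database survives restarts.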

Access the Dashboard

Visit your server's public IP or domain on port 8283 to reach your Letta instance. With Railway, you can skip all these steps: just click “Deploy Now” and your Letta instance will be live in minutes.
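Letta's published Docker image can be started on a VPS with a single `docker run` command. The sketch below builds that command as an argument list, based on the letta/letta:latest image, port 8283, and the environment variables described in this guide; the host data directory is an arbitrary choice, and you should confirm in Letta's documentation which variables enable password protection on your version.

```python
import os
import shlex
from typing import List


def docker_run_command(
    openai_api_key: str,
    server_password: str,
    data_dir: str = "~/.letta/.persist/pgdata",
    host_port: int = 8283,
) -> List[str]:
    """Return the argv for running letta/letta:latest detached on a VPS."""
    return [
        "docker", "run", "-d",
        # Bind-mount a host directory over the bundled PostgreSQL data directory
        # so agents and memory survive container restarts.
        "-v", f"{os.path.expanduser(data_dir)}:/var/lib/postgresql/data",
        # The Letta server listens on 8283 inside the container.
        "-p", f"{host_port}:8283",
        "-e", f"OPENAI_API_KEY={openai_api_key}",
        "-e", f"LETTA_SERVER_PASSWORD={server_password}",
        "letta/letta:latest",
    ]


if __name__ == "__main__":
    # Print a shell-safe version of the command for review before running it.
    print(shlex.join(docker_run_command("your_api_key", "your_password")))
```

Expanding `~` in Python matters because the list is meant to be passed to `subprocess.run(cmd, check=True)` without a shell, where `~` would not be expanded and Docker would reject the relative path.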


Features of Letta

- Open-source and self-hostable AI assistant framework.

- Supports both chat and voice assistant capabilities.

- Integrates with multiple LLMs including OpenAI, Anthropic, and Mistral.

- Customizable memory, persona, and behavior settings.

Official Pricing of Letta (Open Source Framework)

Letta is free and open-source, released under the Apache 2.0 license, so there are no licensing fees. Your only costs come from hosting and API usage (such as OpenAI tokens or database hosting).

Self Hosting Letta vs Paid AI Assistant Services

Self-hosting Letta is free apart from hosting and API usage, and it gives you control over where agent data is stored and how your agents are customized. Paid AI assistant services (like Dialogflow or ChatGPT-based integrations) charge monthly fees and keep your data on the vendor's platform.

Monthly Cost of Self Hosting Letta on Railway

A basic Railway plan runs Letta at $5–$10/month, making it far cheaper than enterprise chatbot services that start at $30–$100/month.

System Requirements for Hosting Letta

- Docker (to run the letta/letta:latest image)

- PostgreSQL for persistence (bundled in the image or external)

- At least 512MB RAM (recommended: 1GB+)

- 1 vCPU minimum (auto-scaled by Railway)
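On a self-managed VPS, you can check a machine against these numbers before installing anything. This is a hypothetical helper, not a Letta tool; the thresholds come straight from the list above (treating 1GB as 1 GiB), and reading RAM via `os.sysconf` only works on POSIX systems. Inside a container it reports the host's memory rather than the container's limit.

```python
import os
from typing import Tuple

# Thresholds taken from the requirements list above.
MIN_RAM_BYTES = 512 * 1024 ** 2      # 512 MB minimum
RECOMMENDED_RAM_BYTES = 1024 ** 3    # 1 GB recommended
MIN_CPUS = 1


def assess_host(ram_bytes: int, cpus: int) -> str:
    """Classify a host as 'insufficient', 'minimum', or 'recommended' for Letta."""
    if ram_bytes < MIN_RAM_BYTES or cpus < MIN_CPUS:
        return "insufficient"
    if ram_bytes < RECOMMENDED_RAM_BYTES:
        return "minimum"
    return "recommended"


def host_resources() -> Tuple[int, int]:
    """Return (total physical RAM in bytes, logical CPU count); POSIX-only via os.sysconf."""
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return ram, os.cpu_count() or 0
```

Running `print(assess_host(*host_resources()))` on the VPS gives a one-word verdict; keeping the classification separate from the system calls makes it easy to test with any numbers.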

FAQs

What is Letta?

Letta is an open-source framework for creating AI assistants (chat or voice) that can connect to large language models like GPT or Claude, giving full control to developers.

How do I self host Letta?

You can self-host Letta by deploying it on Railway with the one-click deploy template, or by running the letta/letta:latest Docker image on any VPS.

What are the benefits of hosting Letta on Railway?

Railway automates setup, scaling, and updates. You click deploy, add your environment variables, and Letta is live, with no further server configuration required.

Is Letta free to use?

Yes, Letta is open-source and free to use. You only pay for hosting and optional AI API usage (like OpenAI tokens).

Can I connect Letta to my own OpenAI key?

Yes, Letta supports direct connection to your OpenAI, Anthropic, or Mistral API keys through environment variables.

How secure is Letta when self hosted?

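Self-hosting gives you full control over your data: agent state and memory live in your own PostgreSQL database, and requests leave the server only when they go to the LLM provider you configure. Setting LETTA_SERVER_PASSWORD keeps the API from being openly accessible. On Railway, the service runs in its own isolated container.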

Can Letta integrate with other apps?

Yes, Letta exposes a REST API that you can call from web apps, Slack or Discord bots, and IoT devices.

How much does it cost to host Letta on Railway?

Hosting typically costs around $5–$10/month depending on Railway’s plan and your database size.

What are Letta’s main features?

Custom AI personalities, long-term memory, voice integration, REST APIs, privacy-first design, and open-source flexibility.

What makes Letta better than closed AI services?

Letta gives you full data ownership, open customization, and freedom to choose your model, unlike closed platforms that lock you into subscriptions.

What programming languages can I use to interact with Letta?

Letta provides a REST API, so you can use any language that can make HTTP requests, such as Python, JavaScript, Java, C#, or Go, to communicate with your agents.
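As an illustration in Python using only the standard library, the sketch below sends one user message to an existing agent and pulls out the assistant's reply text. It assumes the POST /v1/agents/{agent_id}/messages endpoint, a Bearer-token password header, and a response whose `messages` list tags replies with `message_type == "assistant_message"`; `agent-123` is a hypothetical agent ID, so verify these details against the API reference for your Letta version.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_message_request(base_url: str, agent_id: str, password: str, text: str) -> urllib.request.Request:
    """Prepare (not send) a POST that delivers one user message to an agent."""
    payload = {"messages": [{"role": "user", "content": text}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/agents/{agent_id}/messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the server password is accepted as a Bearer token.
            "Authorization": f"Bearer {password}",
        },
        method="POST",
    )


def assistant_replies(response: Dict[str, Any]) -> List[str]:
    """Extract assistant message text from a decoded response body (assumed shape)."""
    return [
        m.get("content", "")
        for m in response.get("messages", [])
        if m.get("message_type") == "assistant_message"
    ]


def send_message(base_url: str, agent_id: str, password: str, text: str, timeout: float = 60.0) -> List[str]:
    """Send a message and return the assistant's reply texts."""
    req = build_message_request(base_url, agent_id, password, text)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return assistant_replies(json.loads(resp.read().decode("utf-8")))
```

Any other language follows the same pattern: an HTTP POST with a JSON body, then filtering the returned messages for the assistant's text.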

Can I train Letta on my own datasets?

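Not in the sense of fine-tuning: Letta does not retrain the underlying model. Instead, you can give agents your own data by storing documents and facts in an agent's archival memory (a vector-searchable store) or attaching them as data sources, so the agent can retrieve your organization's knowledge base, product documentation, or user data when it answers.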
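One way to load a document is to split it into passages and insert each one into an agent's archival memory. The sketch below shows a simple paragraph-packing chunker plus a request builder; it assumes an archival-memory endpoint at POST /v1/agents/{agent_id}/archival-memory taking a `text` field and a Bearer-token password header, both of which you should confirm in the API reference for your Letta version. The 1000-character chunk size is an arbitrary default.

```python
import json
import urllib.request
from typing import List


def chunk_text(text: str, max_chars: int = 1000) -> List[str]:
    """Split text into chunks of at most max_chars, packing whole paragraphs where possible."""
    chunks: List[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        # Hard-split any paragraph that is longer than max_chars on its own.
        for start in range(0, len(para), max_chars):
            piece = para[start:start + max_chars]
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks


def build_passage_request(base_url: str, agent_id: str, password: str, text: str) -> urllib.request.Request:
    """Prepare (not send) a request that stores one passage in an agent's archival memory."""
    return urllib.request.Request(
        # Assumed endpoint path; confirm it for your Letta version.
        url=f"{base_url.rstrip('/')}/v1/agents/{agent_id}/archival-memory",
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the server password is accepted as a Bearer token.
            "Authorization": f"Bearer {password}",
        },
        method="POST",
    )
```

For a hypothetical `handbook.txt`, you would loop over `chunk_text(...)` of its contents and send each request with `urllib.request.urlopen`. Keeping paragraphs together where possible tends to give the agent's retrieval more coherent passages than fixed-size slicing.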

Does Letta support multilingual interactions?

Absolutely. Letta supports multilingual prompts and responses through the underlying LLM. By integrating with OpenAI or Anthropic APIs, your assistant can interact in languages like English, Spanish, French, German, Hindi, and more.

Template Content

letta/letta:latest

LETTA_SERVER_PASSWORD: Password required to access the server