Deploy Ollama + OpenWebUI

[Feb '26] Deploy open-source LLMs with a simple, private web interface.

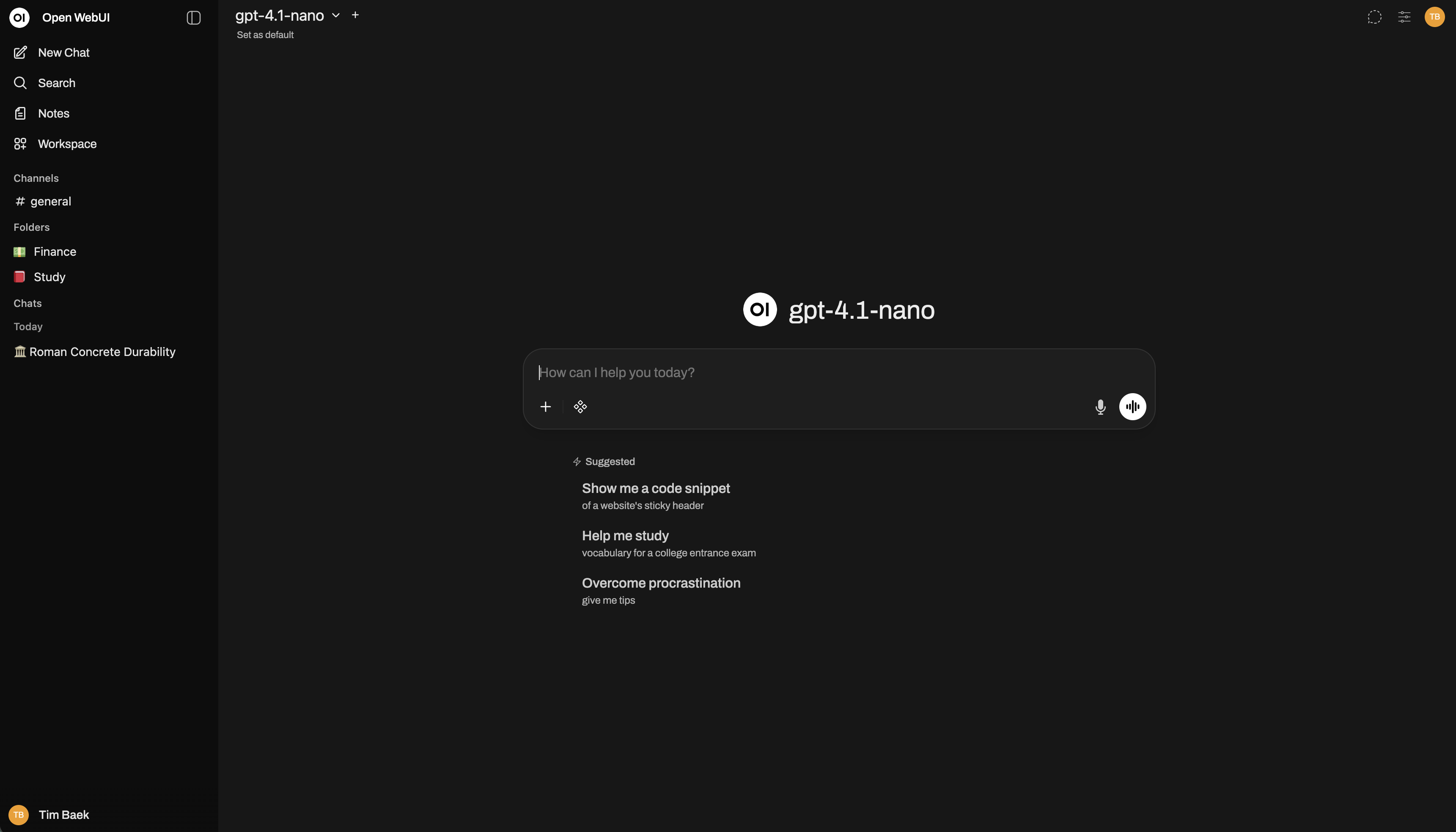

Open-WebUI

Just deployed

/app/backend/data

Ollama

Just deployed

/root/.ollama

Deploy and Host Ollama + OpenWebUI | Self-Host Your Own ChatGPT on Railway

Ollama + OpenWebUI lets you run and chat with open-source LLMs like Llama 3, Mistral, or Gemma — all on your own infrastructure.

This template deploys a ready-to-use stack on Railway with Ollama as your model server and OpenWebUI as your visual chat interface — no setup, no Docker commands, no friction.

About Hosting Ollama + OpenWebUI | Self-Host Your Own ChatGPT

This one-click Railway template gives you a private, fully self-hosted ChatGPT alternative.

It runs Ollama (model backend) and OpenWebUI (browser chat interface) in a single cloud project, already networked together through Railway’s private environment variables.

Everything is pre-configured — from ports and URLs to security keys — so you can focus on using the models, not wiring the infrastructure.

Once deployed, you get:

- A hosted Ollama API (

http://ollama.railway.internal:11434) - A public WebUI URL for chatting with your models

It’s ideal for creators, developers, or teams who want local AI control with cloud simplicity.

How to Use This Template

- Click “Deploy Now” on Railway.

Within a minute, both Ollama and OpenWebUI will be live.

- Visit the public WebUI URL shown in your Railway dashboard.

- Login for the first time — you’ll be asked to set a username and password (this becomes your admin account).

- You’re now inside your Chat Space — start chatting instantly with your default model or add new ones.

🔧 Adding or Modifying Models

- Go to Profile → Admin Panel → Settings → Models.

- Click Manage Models on the top right.

- You’ll already see your Ollama endpoint prefilled as:

http://ollama.railway.internal:11434 - Pull any model you like from the Ollama Model Library.

(Make sure your instance has enough RAM to fit the model.) - Return to the WebUI homepage — your new model will appear on the top left.

✅ That’s it! You can now chat, test, and prototype AI ideas right in your browser.

⚙️ System Requirements

Hosting Ollama + OpenWebUI doesn’t need heavy infrastructure to get started — but your hardware (or Railway plan) should match the model size you plan to use.

Here’s a quick guide:

| Resource | Minimum Recommended | Notes |

|---|---|---|

| CPU | 2 vCPUs | For smooth model inference and WebUI responsiveness. |

| RAM | 4 GB (small models) | Models like Llama 3 (8B) may need 8–16 GB or more. |

| Disk / Volume | 10 GB+ | Model weights are stored under /root/.ollama. Larger models = more storage. |

| Network | Stable connection | Required for model downloads and API interactions. |

💡 Tip: Resource needs grow with model size.

For example:

phi3:miniormistral:7b→ ~4–6 GB RAMllama3:8b→ ~8–10 GB RAMllama3:70b→ 30+ GB RAM (not ideal for small instances)

Storage note:

Ollama downloads and stores model files in /root/.ollama. In Railway, this maps automatically to your project’s persistent volume so models stay even after redeploys.

Performance tip:

Ensure your service has at least 2 GB RAM to avoid crashes while chatting or switching models.

🧩 Best Practice: Deploy Ollama and OpenWebUI Separately

While this template runs Ollama + OpenWebUI on the same instance for simplicity,

the recommended setup is to deploy them as separate Railway services.

Why Separate?

- Performance: Heavy model loads won’t slow down the UI.

- Scalability: Scale Ollama independently for larger models.

- Flexibility: Connect multiple UIs (e.g., Flowise, LM Studio) to the same Ollama backend.

- Reliability: Keeps redeploys and crashes isolated.

How to Connect

In your OpenWebUI service, add this variable:

OLLAMA_BASE_URL="http://${{Ollama.RAILWAY_PRIVATE_DOMAIN}}:11434"

This links the WebUI to your Ollama instance securely over Railway’s private network.

💡 Tip: You can reuse the same Ollama backend across other tools like Flowise or n8n — just point them to the same internal URL.

Common Use Cases

- 🧠 Private AI Chatbot: Host your own ChatGPT-style assistant without sending data to third-party APIs.

- ⚙️ LLM API Sandbox: Use Ollama’s REST API for testing LangChain, Flowise, or custom agent setups.

- 🧩 AI Prototyping Environment: Quickly experiment with models, prompts, and workflows for R&D or content generation.

Dependencies for Ollama + OpenWebUI | Self-Host Your Own ChatGPT Hosting

- Ollama — runs and serves LLMs through an API endpoint.

- OpenWebUI — connects to Ollama and provides an intuitive web chat interface.

Key Environment Variables

| Variable | Description |

|---|---|

OLLAMA_BASE_URL | Internal API endpoint for Ollama (http://${{Ollama.RAILWAY_PRIVATE_DOMAIN}}:11434). |

CORS_ALLOW_ORIGIN | Enables cross-origin requests for OpenWebUI. |

WEBUI_SECRET_KEY | Used by OpenWebUI to secure sessions and logins. |

ENABLE_ALPINE_PRIVATE_NETWORKING | Keeps communication between services private within Railway. |

Cost and Hosting Notes

Railway offers $5 of free monthly credits for all new users — enough to host this stack comfortably for exploration and small projects.

You can upgrade anytime for more compute or storage as your usage grows.

This setup runs entirely on Railway’s managed cloud, but you retain the self-hosting benefits:

- Full control over your data

- Bring-your-own models

- Easy migration to your own server if needed

If you prefer local deployment, Ollama can also be self-hosted with Docker:

docker run -d -v ollama:/root/.ollama -p 11434:11434 ollama/ollama

Then connect OpenWebUI locally with:

OLLAMA_BASE_URL=http://localhost:11434

Alternatives to Ollama

If you’re exploring open LLM backends, consider these realistic options:

- LM Studio — A GUI-first local model runner that’s perfect for non-technical users who want a desktop chat experience.

- LocalAI — An OpenAI-compatible, self-hostable inference engine designed for backend deployments and drop-in API replacement.

- Text Generation WebUI — A highly customizable local web UI (oobabooga) for power users; great for plugins, LoRA, and advanced model tuning.

Why choose Ollama? Ollama strikes a nice balance between ease-of-use, lightweight performance, and programmatic access (CLI + REST API), making it ideal for developers who want a simple production-capable model server.

Alternatives to Railway

If you’re weighing deployment platforms, here are common alternatives and how they compare:

- Railway vs Render — Very user-friendly, good for web apps and managed services; fewer prebuilt templates compared to Railway.

- Railway vs Fly.io — Geographically distributed deployments and more control over instance types; requires more infra familiarity.

- Railway vs Vercel / Netlify — Excellent for frontends and serverless functions, but not focused on stateful services like model servers or databases.

Railway’s sweet spot: rapid one-click templates, built-in private networking, persistent volumes, and an accessible developer experience — especially handy for composing small AI stacks (Ollama + WebUI + Postgres).

❓ FAQ

1. Is Ollama free to use?

Yes — Ollama is free and open-source. You only pay for the cloud resources (Railway credits/plan) when you deploy.

2. What are the minimum requirements to host Ollama?

A small model can run on ~4–8 GB RAM; larger models need more. On Railway, start with the default tier and scale up if you pull bigger models.

3. Can I use this template for free on Railway?

Railway provides initial credits (usually $5) for new users — enough for light experimentation. Upgrade when you need more runtime or storage.

4. How does this differ from running Ollama locally?

Railway removes local setup: no Docker, no port mapping, and automatic networking. Local hosting gives you total control and no recurring cloud cost.

5. Does Ollama include a web UI?

Ollama itself is CLI/API-first. This template pairs Ollama with OpenWebUI to provide a browser-based chat interface.

6. How do I connect Flowise, LangChain, or other tools?

Point your tool to the Ollama API URL on Railway:

http://${{Ollama.RAILWAY_PRIVATE_DOMAIN}}:11434

or use the public domain if you enabled it:

https://${{Ollama.RAILWAY_PUBLIC_DOMAIN}}/api

Deployment Dependencies

- Ollama Official Docs — https://docs.ollama.com/

- Ollama Docker (Hub) — https://hub.docker.com/r/ollama/ollama

- OpenWebUI (GitHub) — https://github.com/open-webui/open-webui

- Ollama Model Library — https://ollama.com/library

- Railway Docs (templates, env, volumes) — https://docs.railway.app/

Why Deploy Ollama + OpenWebUI | Self-Host Your Own ChatGPT on Railway?

Railway is a singular platform to deploy your infrastructure stack. Railway will host your infrastructure so you don't have to deal with configuration, while allowing you to vertically and horizontally scale it.

By deploying Ollama + OpenWebUI | Self-Host Your Own ChatGPT on Railway, you are one step closer to supporting a complete full-stack application with minimal burden. Host your servers, databases, AI agents, and more on Railway.

Template Content

Open-WebUI

ghcr.io/open-webui/open-webuiOllama

ollama/ollama