Deploy Open Source LLM Models

[Feb '26] Self host open LLMs + connect your ChatGPT Account

ollama

Just deployed

/root/.ollama

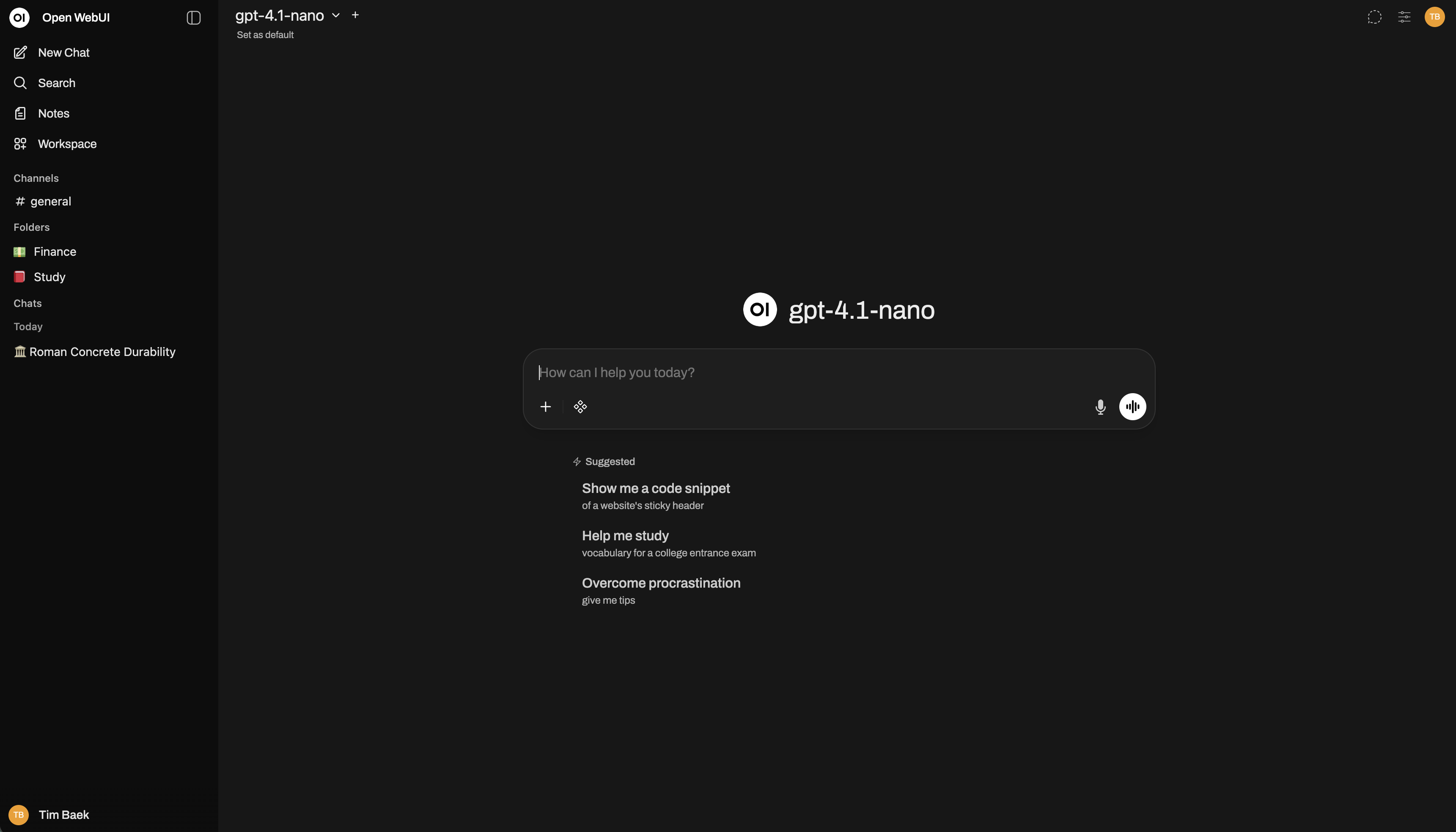

Open WebUI

Just deployed

/app/backend/data

Deploy and Host Open Source LLM Models on Railway

This Railway template gives you a simple setup for running open‑source LLMs. It provides an Ollama model server, the Open WebUI browser interface, persistent storage for models, and optional OpenAI support. The services come pre-connected, so the stack is ready to use as soon as deployment finishes and your models have downloaded.

About Hosting Open Source LLM Models

Hosting open‑source LLMs normally involves downloading models, configuring storage, setting up networking, and linking a UI. This template handles those steps for you. It starts Ollama, downloads the models you list, connects the UI, and uses a persistent volume for storage. You can adjust model choices and scale your instance whenever required.

Configuring Environment Variables

OLLAMA_DEFAULT_MODELS

You can specify a comma-separated list of models from https://ollama.com/library. These models will automatically download at startup.

- Example: OLLAMA_DEFAULT_MODELS="llama3:8b,mistral:7b"

- If you're new, leave this set to the default lightweight model.

- You can change this anytime and redeploy.

- Make sure your system has enough RAM and disk space if you choose large models.
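The template's own startup script is not shown here, so the following is only a hypothetical sketch of how a comma-separated OLLAMA_DEFAULT_MODELS value could be turned into downloads. It assumes the script can reach Ollama at http://localhost:11434 (Ollama's default port) and uses Ollama's /api/pull REST endpoint. The function names are illustrative, not part of the template.

```python
import json
import os
import urllib.request

# Assumption: the script runs next to Ollama, which listens on port 11434 by default.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def parse_model_list(raw: str) -> list[str]:
    """Split a comma-separated OLLAMA_DEFAULT_MODELS value into model tags.

    Whitespace around each entry is trimmed, empty entries are skipped,
    and duplicates are dropped while keeping the original order.
    """
    models: list[str] = []
    for entry in raw.split(","):
        tag = entry.strip()
        if tag and tag not in models:
            models.append(tag)
    return models


def build_pull_request(base_url: str, model: str) -> urllib.request.Request:
    """Build (but do not send) a POST to Ollama's /api/pull endpoint."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_default_models(base_url: str = DEFAULT_OLLAMA_URL) -> None:
    """Pull every model listed in OLLAMA_DEFAULT_MODELS, one at a time."""
    for model in parse_model_list(os.environ.get("OLLAMA_DEFAULT_MODELS", "")):
        with urllib.request.urlopen(build_pull_request(base_url, model)) as resp:
            # With stream=False, Ollama replies with a single JSON status object.
            print(model, json.load(resp).get("status"))
```

Calling pull_default_models() after the Ollama server is up would fetch each listed tag in order, much like running `ollama pull <model>` once per entry.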

OPENAI_API_KEY

This is optional. If you add your OpenAI key:

- You can chat with the OpenAI models your API key can access directly from the UI.

- This includes models such as GPT‑4o. API usage is billed by OpenAI and is separate from any ChatGPT subscription.

- If left empty, the system works purely with open-source models.

System Requirements (Important)

- CPU: 2 vCPUs minimum

- RAM: 4GB+ for small models; 8–12GB recommended for 7B–8B models

- Disk: 10GB+ depending on downloaded models

- Network: Required only for first-time model downloads
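The RAM figures above follow from simple arithmetic: weight size is roughly the parameter count times the bytes stored per parameter. The sketch below assumes a 4-bit quantized model (common for Ollama library tags, but check the tag you pull) and a hypothetical flat 2 GB allowance for the context cache, runtime, and OS. Both numbers are assumptions for illustration, not measurements.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB: billions of params x bytes per param."""
    return params_billions * bits_per_weight / 8


# Hypothetical allowance for the context (KV) cache, the Ollama runtime, and the OS.
ASSUMED_OVERHEAD_GB = 2.0


def fits_in_ram(params_billions: float, bits_per_weight: float, ram_gb: float,
                overhead_gb: float = ASSUMED_OVERHEAD_GB) -> bool:
    """Rough check of whether one model should load on an instance with ram_gb."""
    return weights_gb(params_billions, bits_per_weight) + overhead_gb <= ram_gb


if __name__ == "__main__":
    for params, bits in [(3, 4), (8, 4), (8, 16)]:
        print(f"{params}B @ {bits}-bit: ~{weights_gb(params, bits):.1f} GB weights, "
              f"fits in 8 GB: {fits_in_ram(params, bits, 8)}")
```

An 8B model at 4 bits comes to about 4 GB of weights, which is why 8 GB is a comfortable floor for that size; the same model at 16 bits needs about 16 GB for weights alone and would not fit.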

Common Use Cases

- Personal AI assistant or research companion

- Private ChatGPT-style workspace

- Testing open-source models before committing to heavier deployments

- Running small agents, automations, and quick prototypes

- Combining OpenAI models with open-source models in one UI

Dependencies for Open Source LLM Models Hosting

This template includes:

- Ollama as the open-source model backend

- Open WebUI as the chat interface

- Railway persistent volumes to store downloaded models

- Optional OpenAI integration through OPENAI_API_KEY

Deployment Dependencies

- Sufficient RAM depending on the models you choose

- Enough disk storage in the attached volume for model weights

Implementation Details

- Models are stored under /root/.ollama on your Railway volume.

- Updates to OLLAMA_DEFAULT_MODELS trigger new model downloads on redeploy.

- Open WebUI auto-discovers available models from the connected Ollama backend.
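Ollama reports the models stored on its volume through its /api/tags endpoint, which is one way a UI can discover them. The hypothetical helpers below read that list and work out which requested tags still need downloading. The base URL you pass in is an assumption about your setup; treating a bare name such as `mistral` as `mistral:latest` follows Ollama's default-tag convention.

```python
import json
import urllib.request


def installed_model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an Ollama /api/tags JSON response."""
    return [m["name"] for m in tags_payload.get("models", [])]


def fetch_installed_models(base_url: str) -> list[str]:
    """Ask the Ollama server which models are already on its volume."""
    with urllib.request.urlopen(f"{base_url.rstrip('/')}/api/tags") as resp:
        return installed_model_names(json.load(resp))


def normalize_tag(model: str) -> str:
    """Ollama treats a bare name like 'mistral' as 'mistral:latest'."""
    return model if ":" in model else f"{model}:latest"


def missing_models(requested: list[str], installed: list[str]) -> list[str]:
    """Return requested models that are not yet present on the volume."""
    have = {normalize_tag(name) for name in installed}
    return [m for m in requested if normalize_tag(m) not in have]
```

For example, `missing_models(["llama3:8b", "mistral"], fetch_installed_models("http://localhost:11434"))` would list only the tags a redeploy still has to pull.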

Why Deploy Open Source LLM Models on Railway?

FAQ

1. How do I choose which open-source models to run?

Set OLLAMA_DEFAULT_MODELS to a comma-separated list of the models you want. Start with a small model if you’re unsure. You can update this anytime and redeploy.

2. How much RAM do I need for common models?

Small models run on about 4GB RAM. Models in the 7B–8B range typically need 8–12GB. If a model won’t load, the instance likely lacks enough memory.

3. Where are models stored after downloading?

Models are saved in /root/.ollama on your Railway volume. They stay there across restarts and redeploys.

4. Can I run multiple models at once?

You can store many models as long as there’s disk space. Running several at once requires enough RAM to hold all of them in memory together.

5. Can I use OpenAI and open-source models together?

Yes. Add your OPENAI_API_KEY and the UI will include OpenAI options alongside your local models.

6. What happens if I leave the OpenAI key empty?

You’ll only see open-source models. Everything else works the same way.

7. What if my instance doesn’t have enough disk space?

Model files range from a few gigabytes to tens of gigabytes each. If a download fails for lack of space, increase your Railway volume size and redeploy.
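Before pulling a large model, you could compare its download size (listed on its ollama.com library page) with the free space on the volume. This is a hypothetical helper using Python's `shutil.disk_usage`; the path is the volume mount from the template description, and the 1 GB headroom is an arbitrary safety margin, not an Ollama rule.

```python
import shutil

# Volume mount path taken from the template description.
OLLAMA_VOLUME = "/root/.ollama"


def free_gb(path: str = OLLAMA_VOLUME) -> float:
    """Free space on the filesystem that holds `path`, in GB (1 GB = 1e9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for(download_gb: float, path: str = OLLAMA_VOLUME,
                 headroom_gb: float = 1.0) -> bool:
    """True if the volume can take a download of `download_gb` plus some headroom.

    The 1 GB headroom default is an arbitrary safety margin, not an Ollama rule.
    """
    return free_gb(path) >= download_gb + headroom_gb
```

Run inside the Ollama service, `has_room_for(5.0)` would tell you whether a roughly 5 GB model is likely to fit before you start the download.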

8. Can I switch models later without breaking anything?

Yes. Update OLLAMA_DEFAULT_MODELS and redeploy, or pull new models from the UI. Open WebUI lists newly downloaded models automatically.

9. Can external tools connect to this setup?

Yes. Point Flowise, LangChain, LM Studio, or your own scripts at the Ollama endpoint. The internal endpoint is reachable only from other services in the same Railway project.
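As a minimal sketch, a script running in another service of the same Railway project could call Ollama's /api/chat endpoint directly. It assumes the Ollama service is named `ollama`, so its private hostname is `ollama.railway.internal`, and that it listens on Ollama's default port 11434; `OLLAMA_BASE_URL` is a hypothetical variable name for overriding that address. Only the Python standard library is used.

```python
import json
import os
import urllib.request

# Assumed address: Railway's private DNS name for a service called "ollama"
# plus Ollama's default port. OLLAMA_BASE_URL is a hypothetical override.
OLLAMA_BASE_URL = os.environ.get("OLLAMA_BASE_URL", "http://ollama.railway.internal:11434")


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn request to Ollama's /api/chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str, base_url: str = OLLAMA_BASE_URL) -> str:
    """Send the prompt and return the assistant's reply text."""
    request = build_chat_request(base_url, model, prompt)
    # Generous timeout: the first request may wait for the model to load into RAM.
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `print(chat("llama3:8b", "Give me three test prompts."))` prints the reply for a model that is already downloaded. Frameworks such as LangChain or Flowise typically ask for the same base URL in their Ollama settings.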

10. Is this suitable for beginners?

Yes. The template handles setup and connectivity. You only choose the models and optionally add an OpenAI key.

Railway is a singular platform to deploy your infrastructure stack. Railway will host your infrastructure so you don't have to deal with configuration, while allowing you to vertically and horizontally scale it.

By deploying Open Source LLM Models on Railway, you are one step closer to supporting a complete full-stack application with minimal burden. Host your servers, databases, AI agents, and more on Railway.

Template Content

- ollama: ollama/ollama

- Open WebUI: ghcr.io/open-webui/open-webui