Deploy Open WebUI on Railway

[Mar '26] OpenWebUI | Self-hosted AI interface. Connect to any LLM.

Open-WebUI


Volume: /app/backend/data

Deploy and Host Open-WebUI on Railway

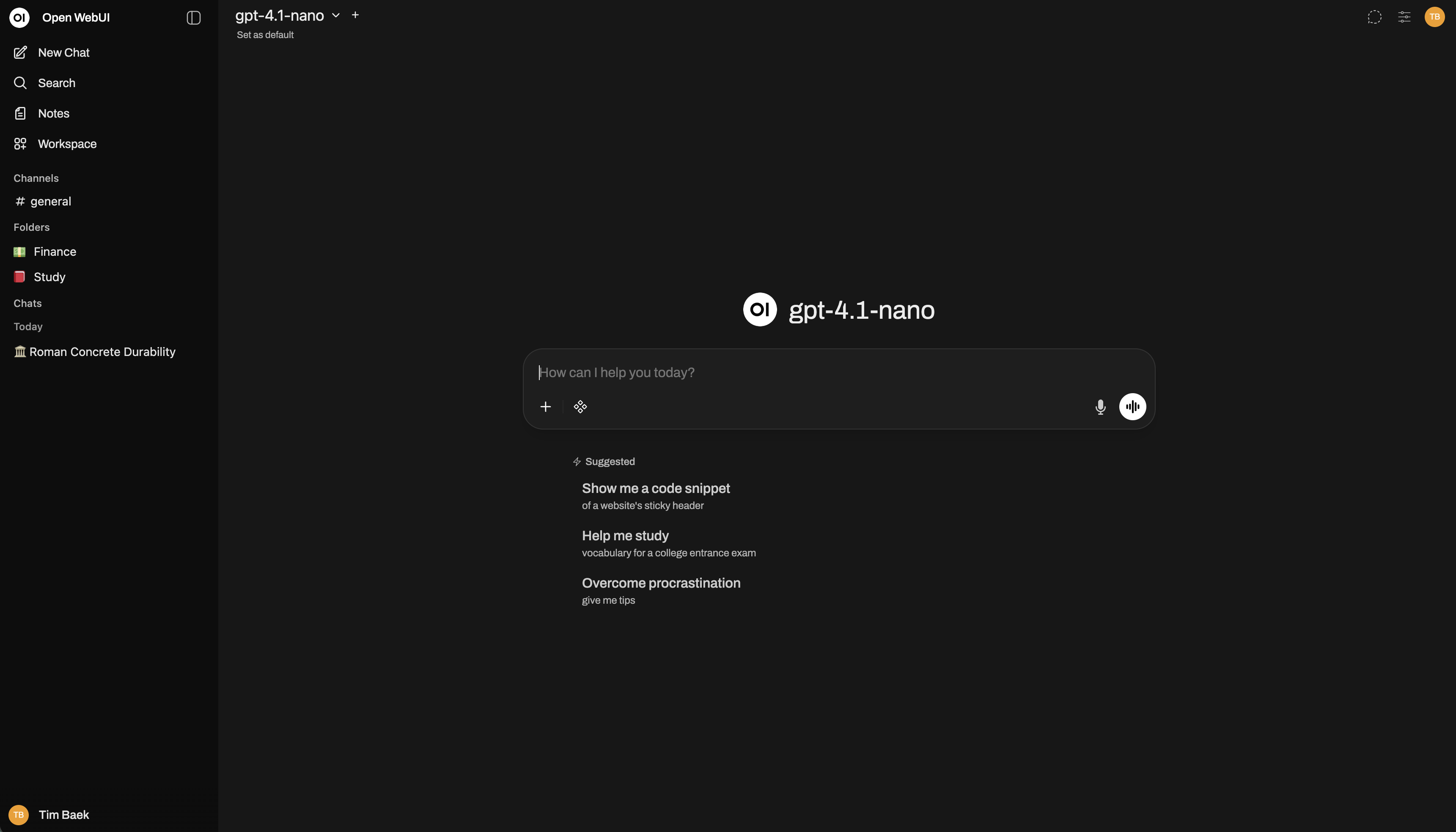

Open WebUI is an open-source, self-hosted chat interface for large language models (LLMs). It provides a ChatGPT-like experience for working with models served by Ollama or by hosted APIs such as OpenAI and Anthropic, complete with RAG workflows, document uploads, and multi-user authentication.

About Hosting Open-WebUI

Hosting Open WebUI gives you a private, production-ready interface for running and managing AI chat applications. With this template, you can deploy Open WebUI to Railway in a single click—no manual Docker setup or infrastructure provisioning required.

Railway pulls the official Open WebUI image, serves it over HTTPS on a generated domain, attaches a persistent volume for your data, and exposes environment variables for connecting external LLM backends such as Ollama or the OpenAI API. You get a secure, always-on chat UI whose CPU and memory you can raise as your workload grows.

Common Use Cases

- Building an internal, privacy-focused AI assistant for teams

- Hosting your own ChatGPT-style interface connected to Ollama or OpenAI

- Rapid prototyping and testing of multi-model conversational workflows

- Providing clients or stakeholders with a custom-branded GenAI chat interface (review the Open WebUI license's branding terms first)

Dependencies for Open-WebUI Hosting

- Railway Account – to deploy and manage your project

- Open WebUI container image – the official Docker image (`ghcr.io/open-webui/open-webui`) built from the GitHub repository

- Optional LLM Backends – e.g., Ollama for local inference or OpenAI API for cloud models

Deployment Dependencies

- Official Open-WebUI GitHub Repo

- Railway Docs

- Ollama Installation Guide (optional backend)

How to Use Open-WebUI

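Once deployed, open your Railway-generated URL and sign up. The first account you create becomes the admin.

Connect your preferred model backend, either from the admin settings panel or through environment variables on the Railway service:

- For Ollama, set `OLLAMA_BASE_URL` to an Ollama instance that Railway can reach, such as a remote server or another Railway service.

- For OpenAI, add your `OPENAI_API_KEY`.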
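Before wiring a backend into Open WebUI, it can help to confirm that it answers at all. A minimal sketch using only the Python standard library, assuming Ollama's model-listing endpoint `GET /api/tags` and the OpenAI-compatible `GET /v1/models`; the helper names `ollama_tags_request`, `openai_models_request`, and `check` are hypothetical and not part of Open WebUI:

```python
import urllib.request


def ollama_tags_request(base_url: str) -> urllib.request.Request:
    """Build a GET request for Ollama's list of local models (GET /api/tags)."""
    return urllib.request.Request(base_url.rstrip("/") + "/api/tags")


def openai_models_request(
    api_key: str, base_url: str = "https://api.openai.com/v1"
) -> urllib.request.Request:
    """Build a GET request for an OpenAI-compatible model list (GET /models)."""
    return urllib.request.Request(
        base_url.rstrip("/") + "/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )


def check(req: urllib.request.Request, timeout: float = 10.0) -> bool:
    """Send the request; True on a 2xx response, False on HTTP or network errors."""
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # URLError, HTTPError, and timeouts all derive from OSError
        return False
```

For example, `check(ollama_tags_request("https://ollama.example.com"))` returns `True` only if the server replies with a 2xx status. Run from your own machine, this proves the backend is reachable from there, not from inside Railway; an Ollama server on a laptop without a public address or tunnel will pass locally but stay invisible to the Railway service.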

You can then upload documents, build RAG workflows, or chat directly with multiple models—all within your secure Railway deployment.

What Is the Cost of Using Open-WebUI?

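Open WebUI itself is free to use. Its source is published under the Open WebUI License, a BSD-3-Clause-based license that adds a requirement to keep Open WebUI branding in most deployments.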

Hosting on Railway follows your Railway plan:

- Free Tier – ideal for personal testing and light usage.

- Paid Plans – recommended for persistent, always-on deployments with higher memory and storage requirements.

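Railway bills by usage (CPU, memory, volume storage, and network egress), so a small, lightly used instance typically costs a few dollars per month. Model API usage (for example, OpenAI) is billed separately by that provider.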
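Because Railway billing is usage-based, a rough monthly estimate is each resource's average usage multiplied by its unit rate, summed across resources. A minimal sketch of that arithmetic; the `Usage` and `Rates` types are hypothetical, the rates you pass in are placeholders to be replaced with figures from Railway's current pricing, and plan fees and included credits are deliberately left out:

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average number of hours in a month (8760 / 12)


@dataclass
class Usage:
    """Average resource usage of the Open WebUI service over a month."""
    avg_vcpu: float        # average vCPUs in use
    avg_memory_gb: float   # average memory in use, in GB
    volume_gb: float       # persistent volume size, in GB
    egress_gb: float       # outbound network traffic per month, in GB


@dataclass
class Rates:
    """Unit prices. Hypothetical placeholders: take real values from Railway's pricing page."""
    vcpu_per_hour: float
    memory_gb_per_hour: float
    volume_gb_per_month: float
    egress_per_gb: float


def monthly_cost(u: Usage, r: Rates, hours: float = HOURS_PER_MONTH) -> float:
    """Sum of usage x rate for each billed resource (excludes plan fees and credits)."""
    compute = u.avg_vcpu * r.vcpu_per_hour * hours
    memory = u.avg_memory_gb * r.memory_gb_per_hour * hours
    storage = u.volume_gb * r.volume_gb_per_month
    egress = u.egress_gb * r.egress_per_gb
    return compute + memory + storage + egress
```

Since billing follows actual usage rather than configured limits, plug in averages rather than peaks; a mostly idle chat UI costs far less than its memory limit would suggest.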

Open-WebUI Alternatives

| Platform | Quick Summary |
|---|---|
| LibreChat | Polished ChatGPT-style UI with multi-provider chat support. |
| AnythingLLM | Document-centric RAG platform with workspace management. |
| LobeChat | Beautiful UI, plugin store, and multimodal capabilities. |
| BionicGPT | Enterprise-grade alternative with RBAC and compliance tooling. |

Frequently Asked Questions (FAQs)

1. Is Open WebUI safe to use?

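Yes, with caveats. Your chats, uploaded documents, and API keys are stored in your own Railway deployment rather than in a third-party chat service. Prompts sent to a hosted model API (such as OpenAI) are still processed by that provider, so keep authentication enabled (`WEBUI_AUTH=True`) and set a strong `WEBUI_SECRET_KEY`.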

2. Can I connect multiple models?

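Yes. Open WebUI connects natively to Ollama and to any OpenAI-compatible API, which covers OpenAI itself and many other providers and self-hosted servers. Other services, such as Anthropic, can be added through an OpenAI-compatible endpoint or an extension.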

3. Does it support RAG or document-based Q&A?

Yes. You can upload PDFs, plain-text, or Markdown files and ask questions grounded in them through retrieval-augmented generation (RAG).

4. Do I need a GPU?

Not necessarily. Hosted APIs (OpenAI, Anthropic, Mistral) need no GPU at all. For self-hosted inference, run Ollama separately on a GPU machine and point `OLLAMA_BASE_URL` at it.

5. Can I customize the interface?

Yes, within limits. You can adjust themes and add extensions such as Functions and Tools, but the Open WebUI License restricts removing or replacing its branding in most deployments.

Implementation Details (Optional)

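If you prefer manual configuration, set these variables on the Railway service (or in a `.env` file) before first launch:

```env
ENV=prod
PORT=8080
WEBUI_AUTH=True
# Set a long random value and keep it stable; changing it invalidates existing login sessions
WEBUI_SECRET_KEY=
ENABLE_OLLAMA_API=True
OLLAMA_BASE_URL=https://ollama.example.com
# Must point at the persistent volume, which this template mounts at /app/backend/data
DATA_DIR=/app/backend/data
```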
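Two settings in this configuration are easy to get wrong: leaving `WEBUI_SECRET_KEY` empty, and pointing `DATA_DIR` somewhere other than the persistent volume (this template mounts its volume at `/app/backend/data`), which would lose data on redeploy. A minimal Python sketch that generates a key with the standard `secrets` module and lints a `.env`-style string; `parse_env`, `check_env`, and `VOLUME_MOUNT` are hypothetical helpers, and the parser handles only plain `KEY=value` lines (no quoting or `export`):

```python
import secrets

# Assumption: the persistent volume path shown for this template
VOLUME_MOUNT = "/app/backend/data"


def generate_secret_key(nbytes: int = 32) -> str:
    """Random URL-safe string for WEBUI_SECRET_KEY (32 random bytes -> 43 characters)."""
    return secrets.token_urlsafe(nbytes)


def parse_env(text: str) -> dict:
    """Parse simple KEY=value lines; skips blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            env[key.strip()] = value.strip()
    return env


def check_env(env: dict, volume_mount: str = VOLUME_MOUNT) -> list:
    """Return human-readable problems; an empty list means nothing was flagged."""
    problems = []
    if not env.get("WEBUI_SECRET_KEY"):
        problems.append("WEBUI_SECRET_KEY is empty")
    # Assumed default when DATA_DIR is unset: the path the official image stores data in
    data_dir = env.get("DATA_DIR", "/app/backend/data")
    if data_dir.rstrip("/") != volume_mount.rstrip("/"):
        problems.append(f"DATA_DIR={data_dir} is not on the volume at {volume_mount}")
    if env.get("WEBUI_AUTH", "True").lower() != "true":
        problems.append("WEBUI_AUTH is disabled; anyone with the URL can use the instance")
    return problems
```

For example, paste the output of `generate_secret_key()` into `WEBUI_SECRET_KEY`, then run `check_env(parse_env(open(".env").read()))` and fix anything it reports.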

Why Deploy Open-WebUI on Railway?

Railway is a single platform for deploying your infrastructure stack. Railway hosts your infrastructure so you don't have to deal with configuration, while allowing you to scale it vertically and horizontally.

By deploying Open-WebUI on Railway, you are one step closer to supporting a complete full-stack application with minimal burden. Host your servers, databases, AI agents, and more on Railway.

Template Content

Open-WebUI

ghcr.io/open-webui/open-webui